---

title: pgvector, PostgresMLに対応したVMware Greenplum® 7をvSphere上のRocky Linux VMにインストールするメモ

tags: ["Greenplum", "Tanzu", "PostgreSQL", "Rocky", "pgvector", "PostgresML", "vSphere", "Machine Learning"]

categories: ["Middleware", "RDBMS", "Greenplum"]

date: 2023-10-02T06:52:05Z

updated: 2023-10-31T08:01:23Z

---

[VMware Greenplum®](https://network.tanzu.vmware.com/products/vmware-greenplum) 7がリリースされました。[pgvector](https://github.com/pgvector/pgvector) や[PostgresML](https://postgresml.org/)に対応し、AI対応が強化されています。

VMware Greenplum® 7を vSphere上にインストールしてみます。

Coordinator 1台 (`gp-coordinator`) と Segment 2台 (`gp-segment1`, `gp-segment2`) の構成を作ります。本記事ではVMware Greenplum® 7.0.0をインストールします。

基本的には [インストールガイド](https://docs.vmware.com/en/VMware-Greenplum/7/greenplum-database/install_guide-install_guide.html) にしたがって作業しましたが、以下の手順はとりあえず動作させるための設定しか行っておらず、推奨設定を全て実施しているわけではありません。

自己責任でご参照ください。

**目次**

### Rocky Linux VMの作成

LinuxのDistroには [サポートされている](https://docs.vmware.com/en/VMware-Greenplum/7/greenplum-database/install_guide-platform-requirements-overview.html#operating-systems) Rocky Linux 8を使用します。

ISOファイルからVMを作成します。

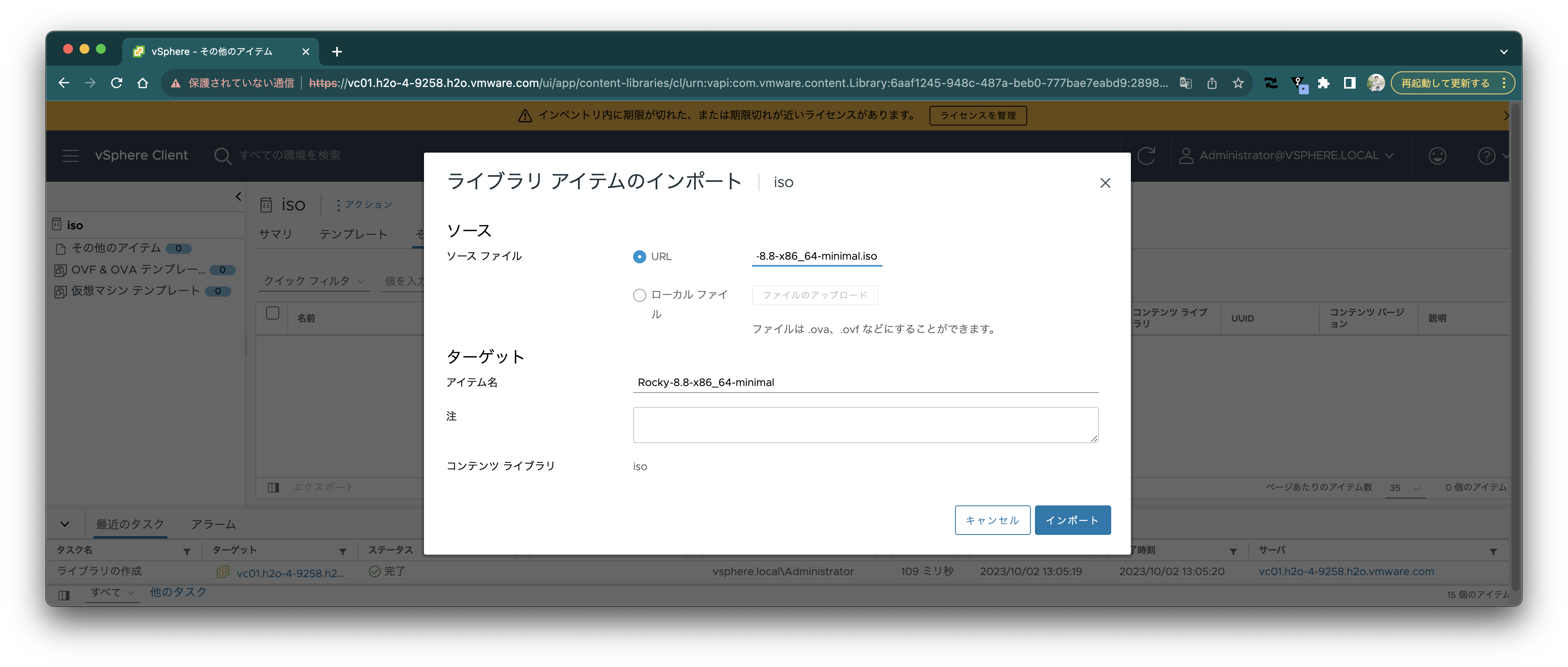

https://download.rockylinux.org/pub/rocky/8/isos/x86_64/Rocky-8.8-x86_64-minimal.iso のISOファイルをコンテンツライブラリに保存しておきます。

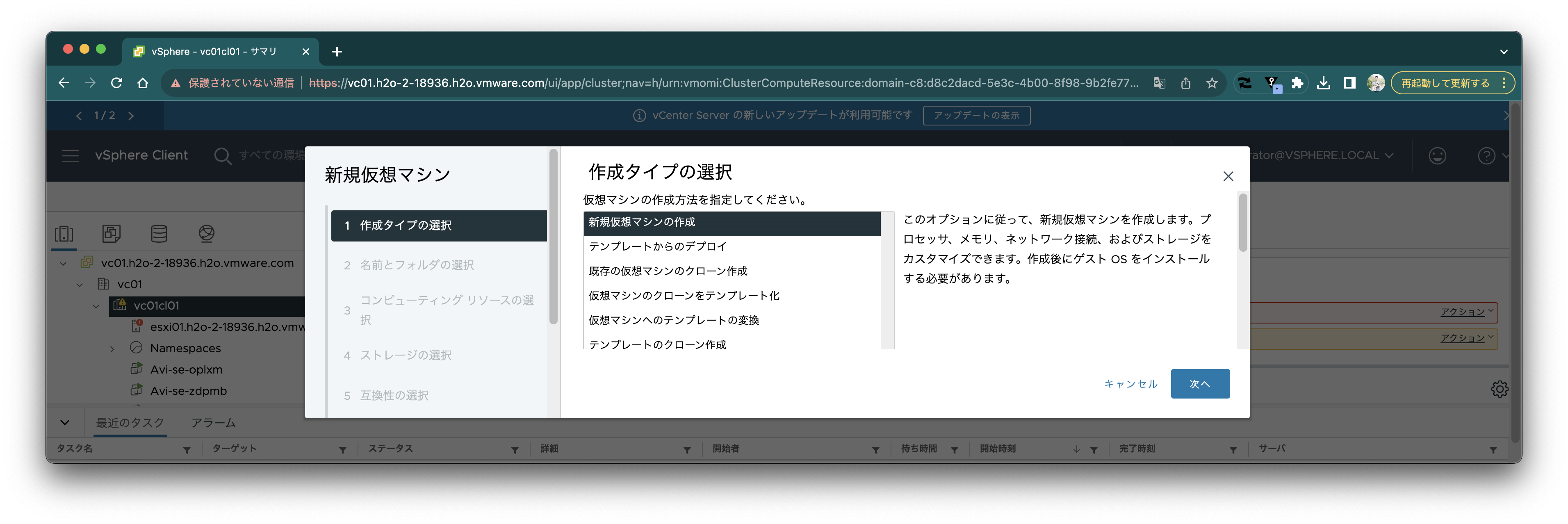

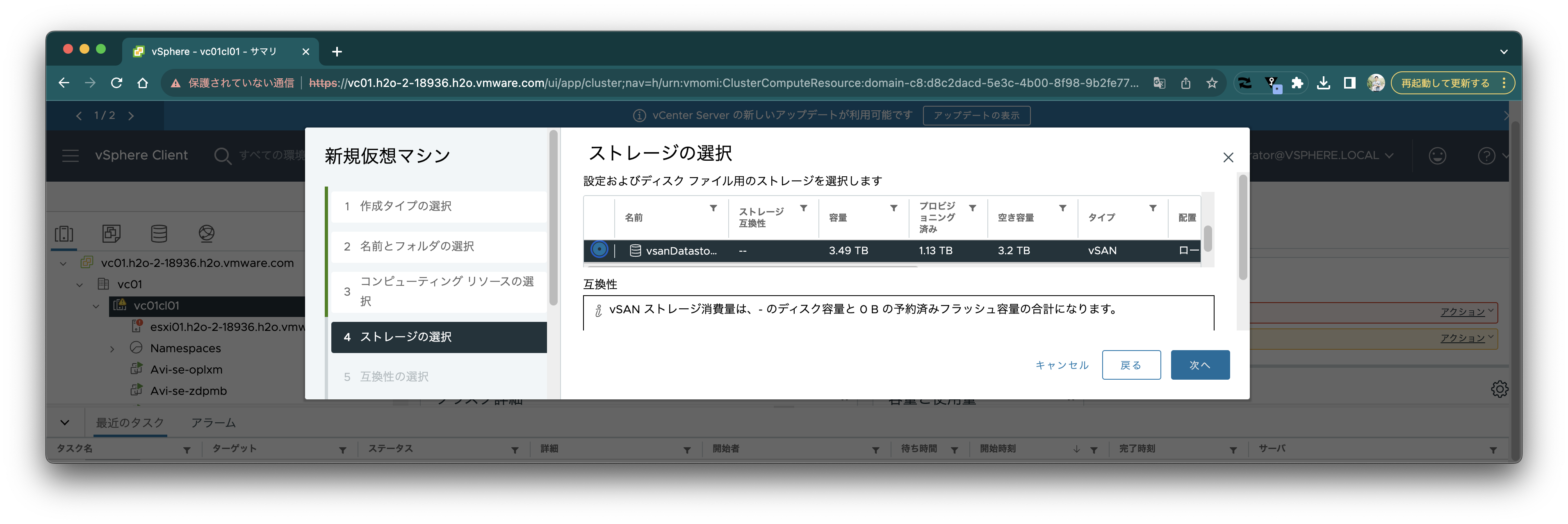

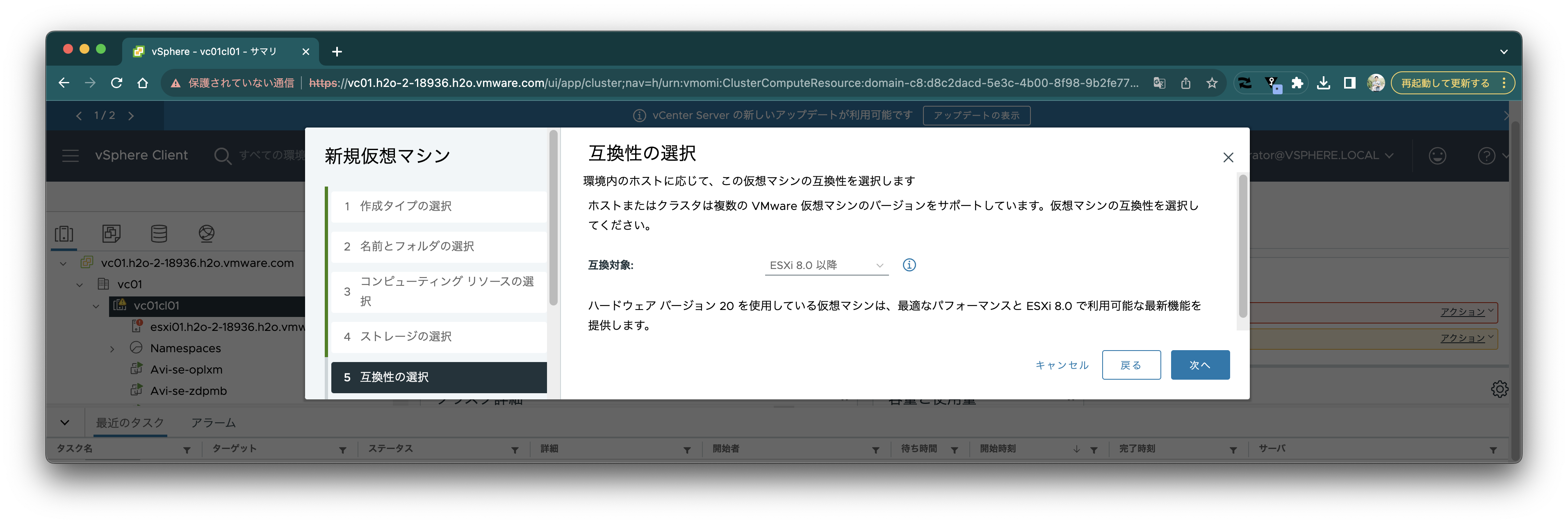

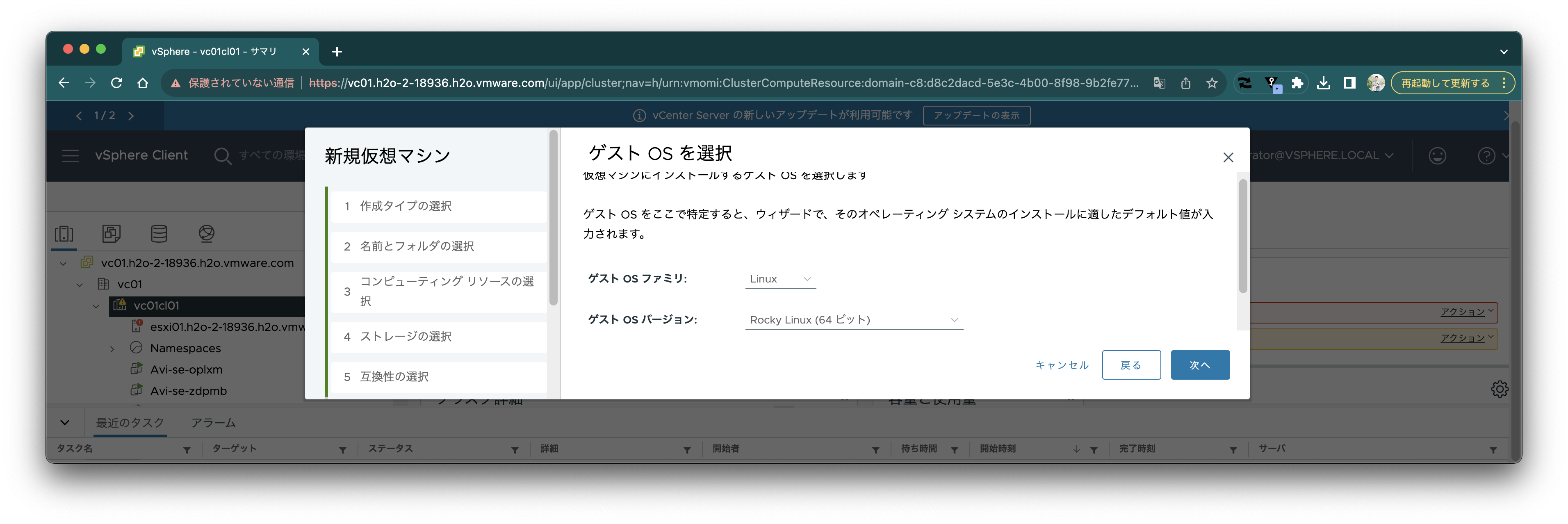

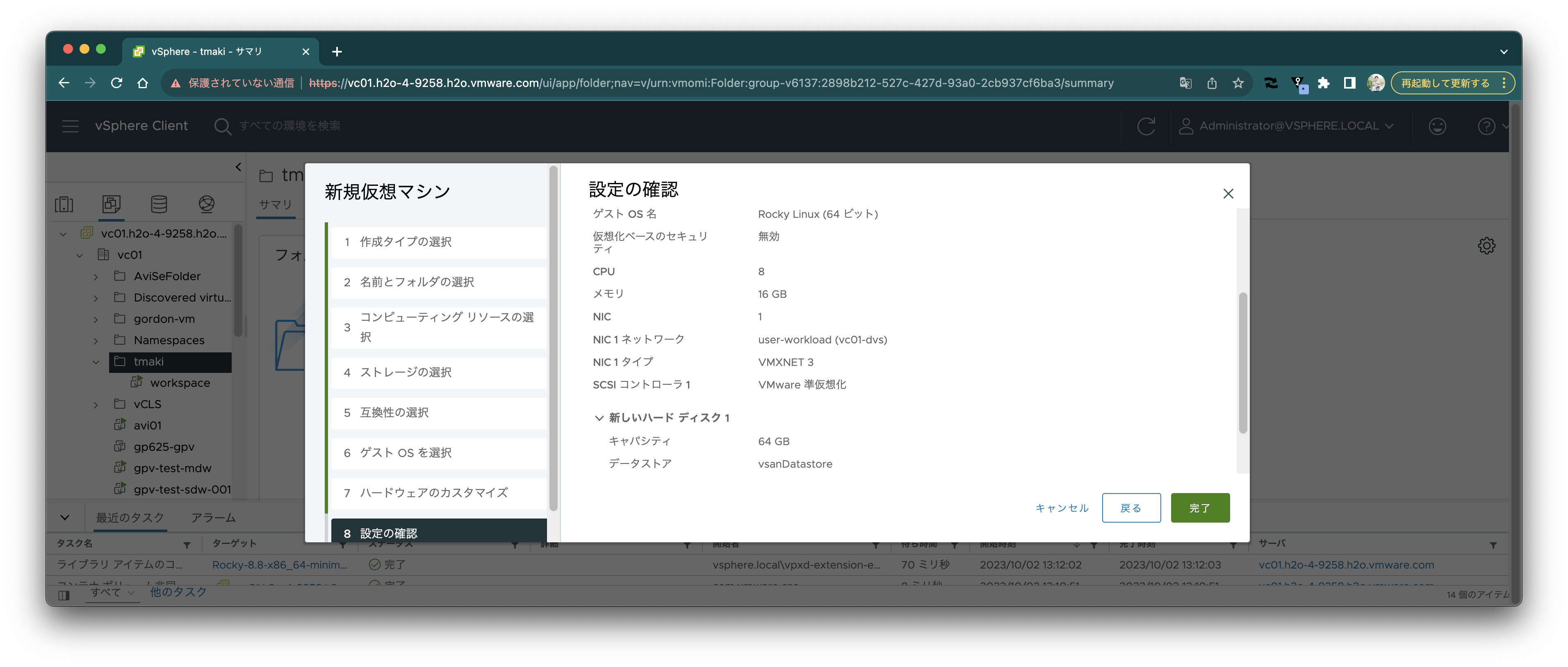

VMを新規作成します。

VMを新規作成します。

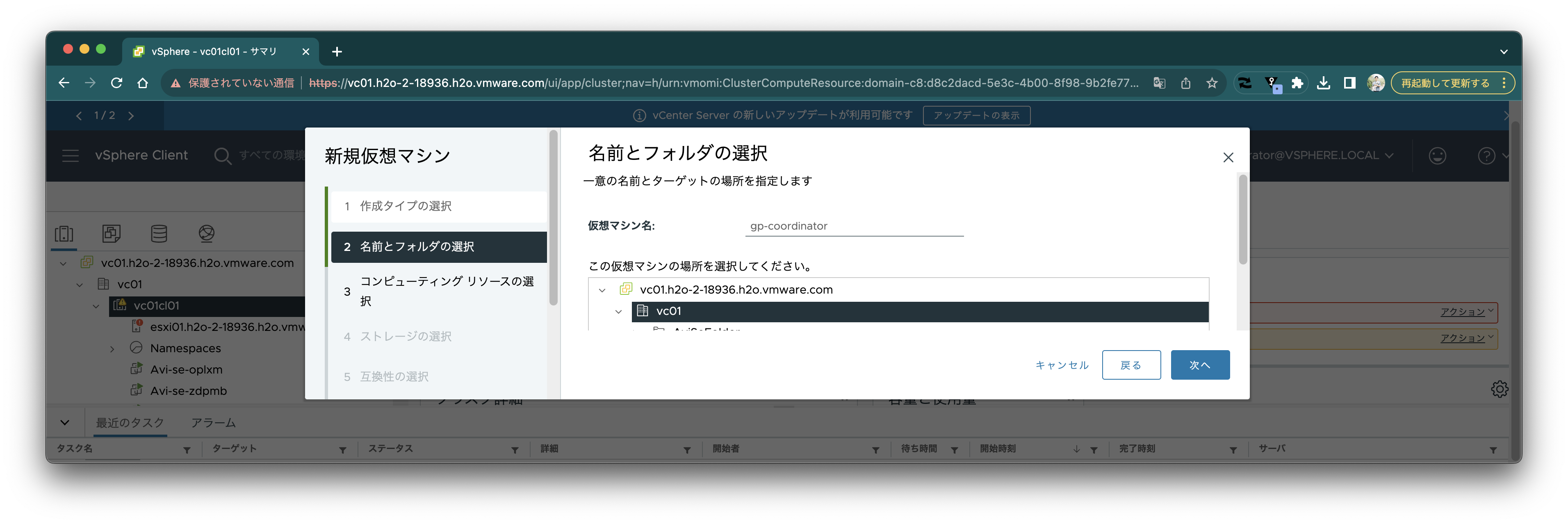

名前は`gp-coordinator`にします。

名前は`gp-coordinator`にします。

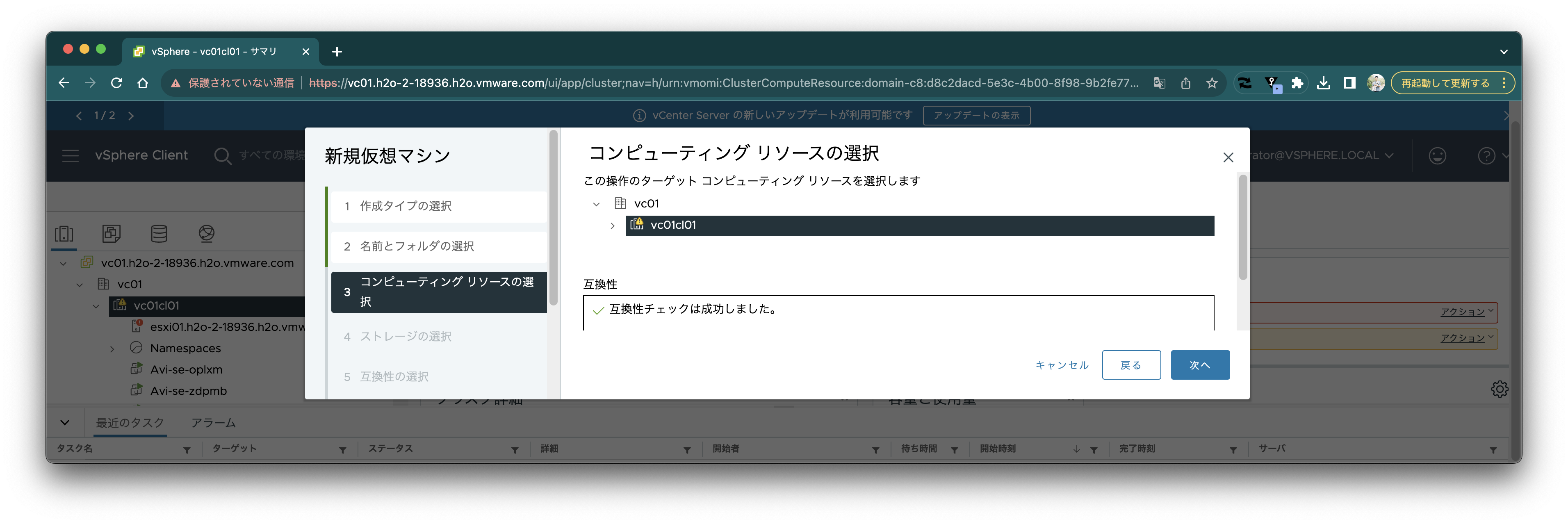

以下、適当に選択します。

以下、適当に選択します。

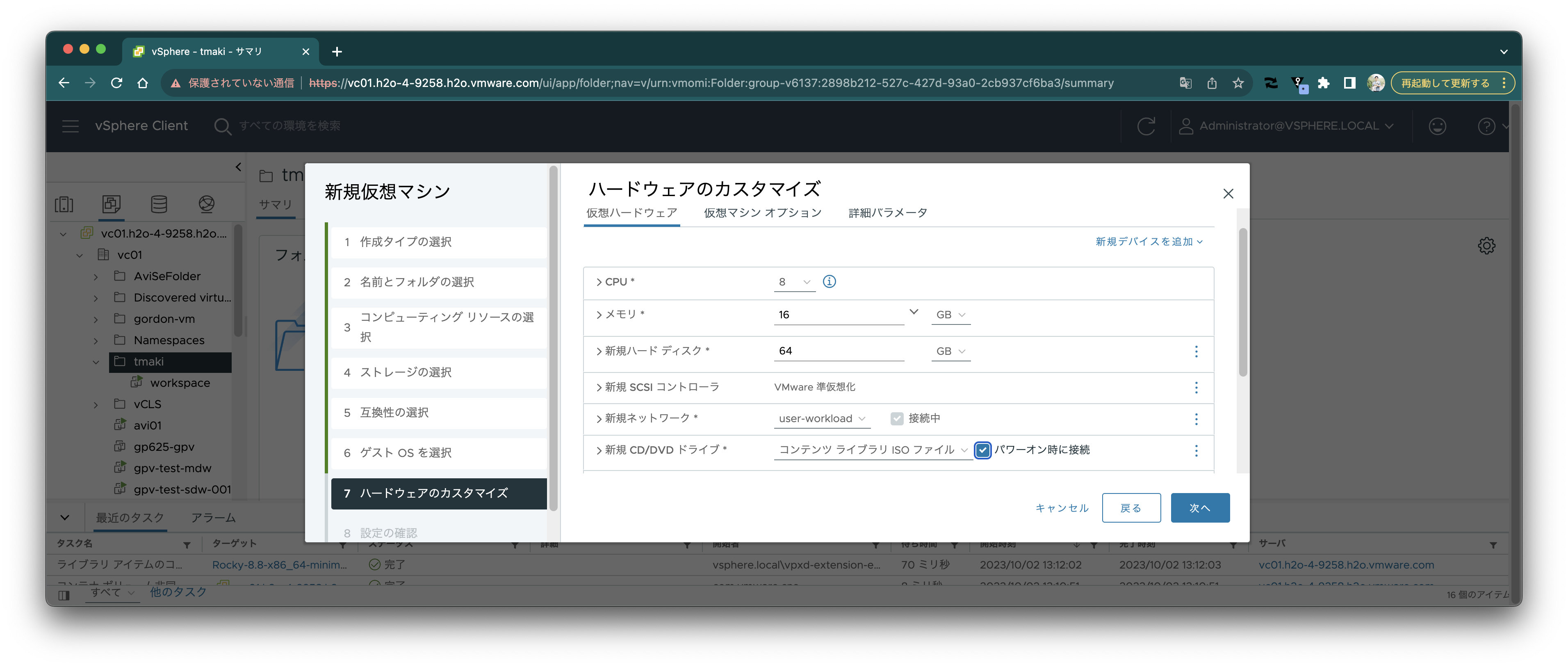

CPUは8、メモリは16GBにしましたが、もっと小さくても動作すると思います。CD/DVDドライブにコンテンツライブラリを指定し、ISOイメージをマウントします。

CPUは8、メモリは16GBにしましたが、もっと小さくても動作すると思います。CD/DVDドライブにコンテンツライブラリを指定し、ISOイメージをマウントします。

VMを作成します。

VMを作成します。

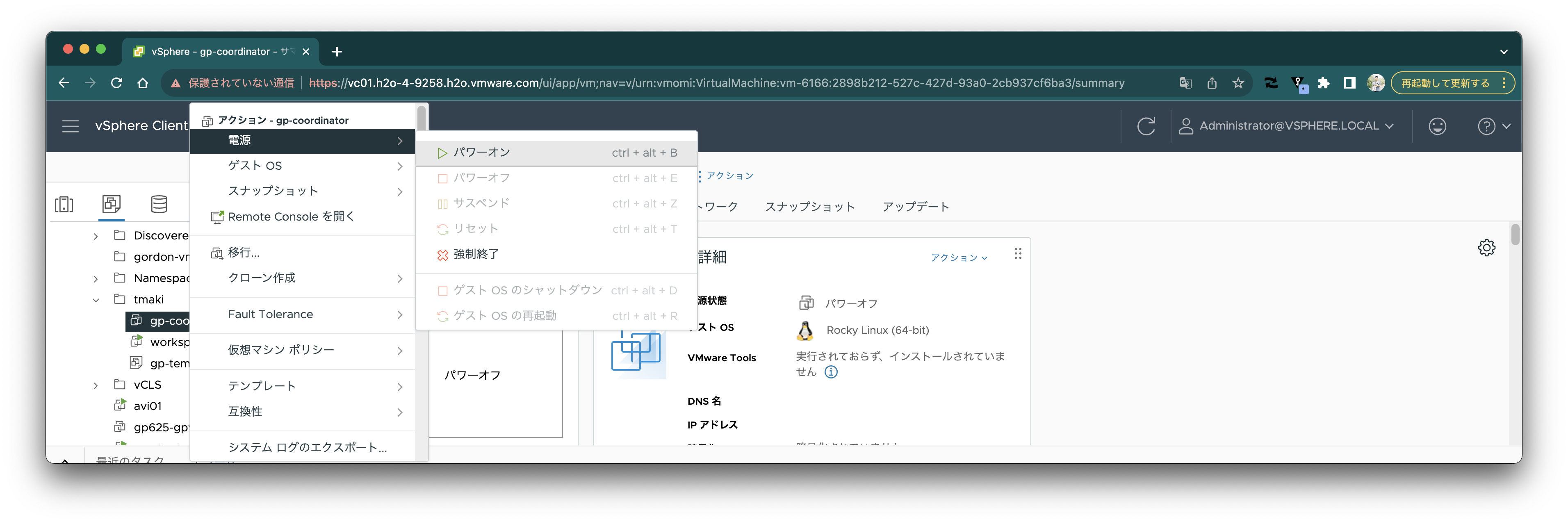

パワーオンします。

パワーオンします。

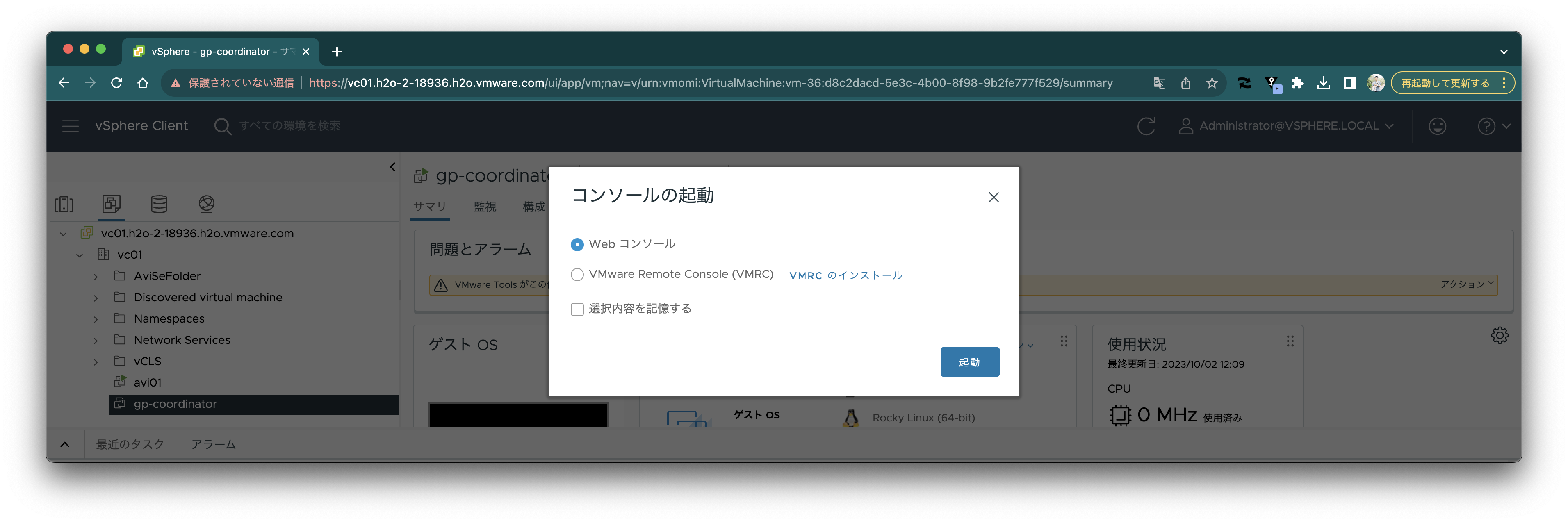

コンソールを起動します。

コンソールを起動します。

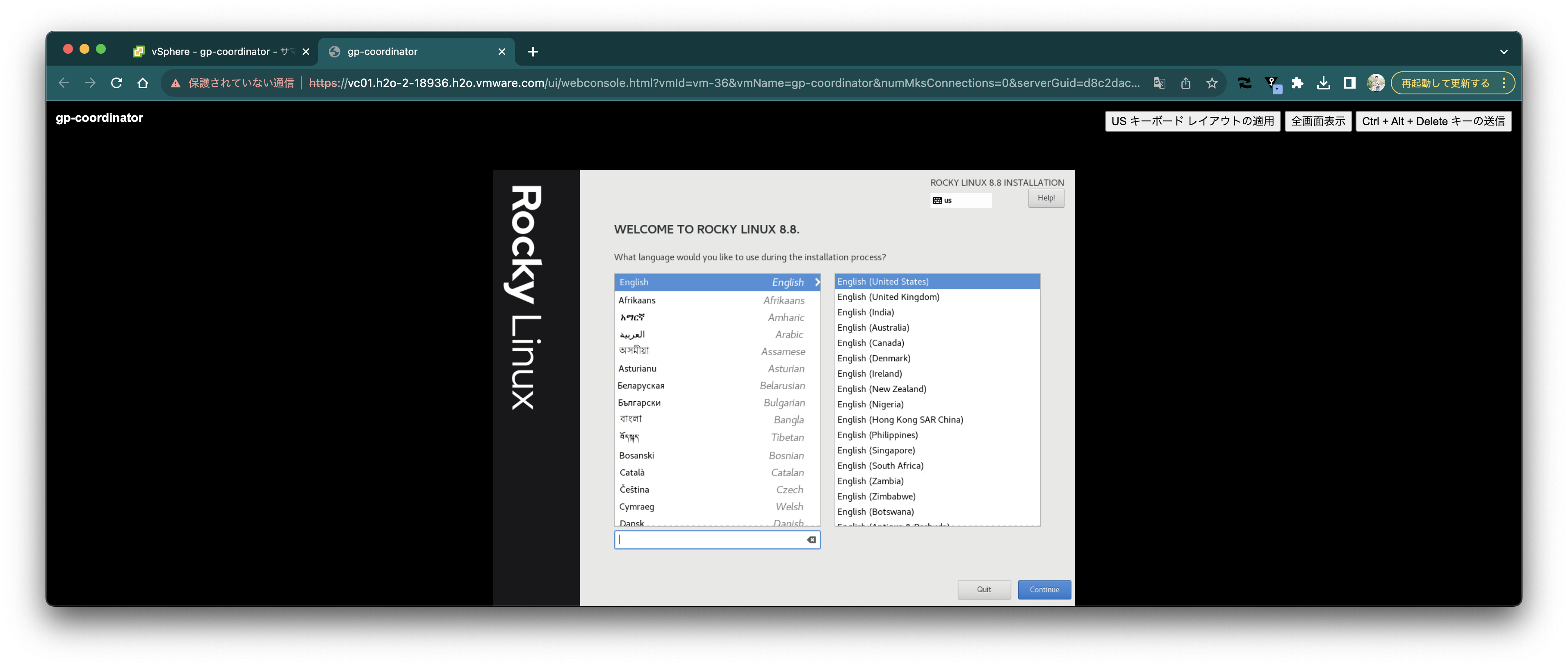

"Install Rocky Linux 8.8" を選択します。

"Install Rocky Linux 8.8" を選択します。

Englishを選択。

Englishを選択。

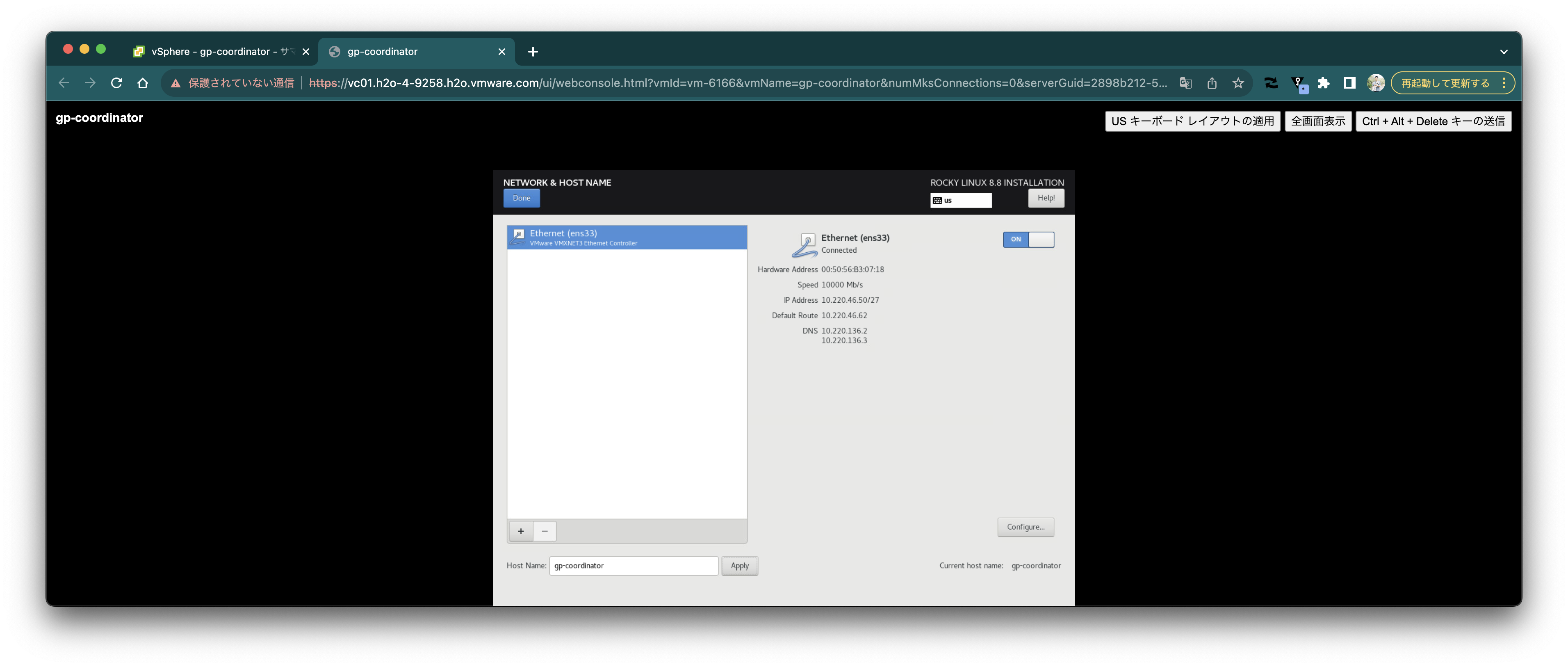

Networkを選択し、ONにします。IPアドレス(ここでは10.220.46.50)を控えておきます。Hostnameも`gp-coordinator`にします。"Done"をクリックします。

Networkを選択し、ONにします。IPアドレス(ここでは10.220.46.50)を控えておきます。Hostnameも`gp-coordinator`にします。"Done"をクリックします。

rootユーザーのパスワードを設定し、"Done"をクリックします。

rootユーザーのパスワードを設定し、"Done"をクリックします。

(オプショナルで)Timezoneを選択し、"Done"をクリックします。

(オプショナルで)Timezoneを選択し、"Done"をクリックします。

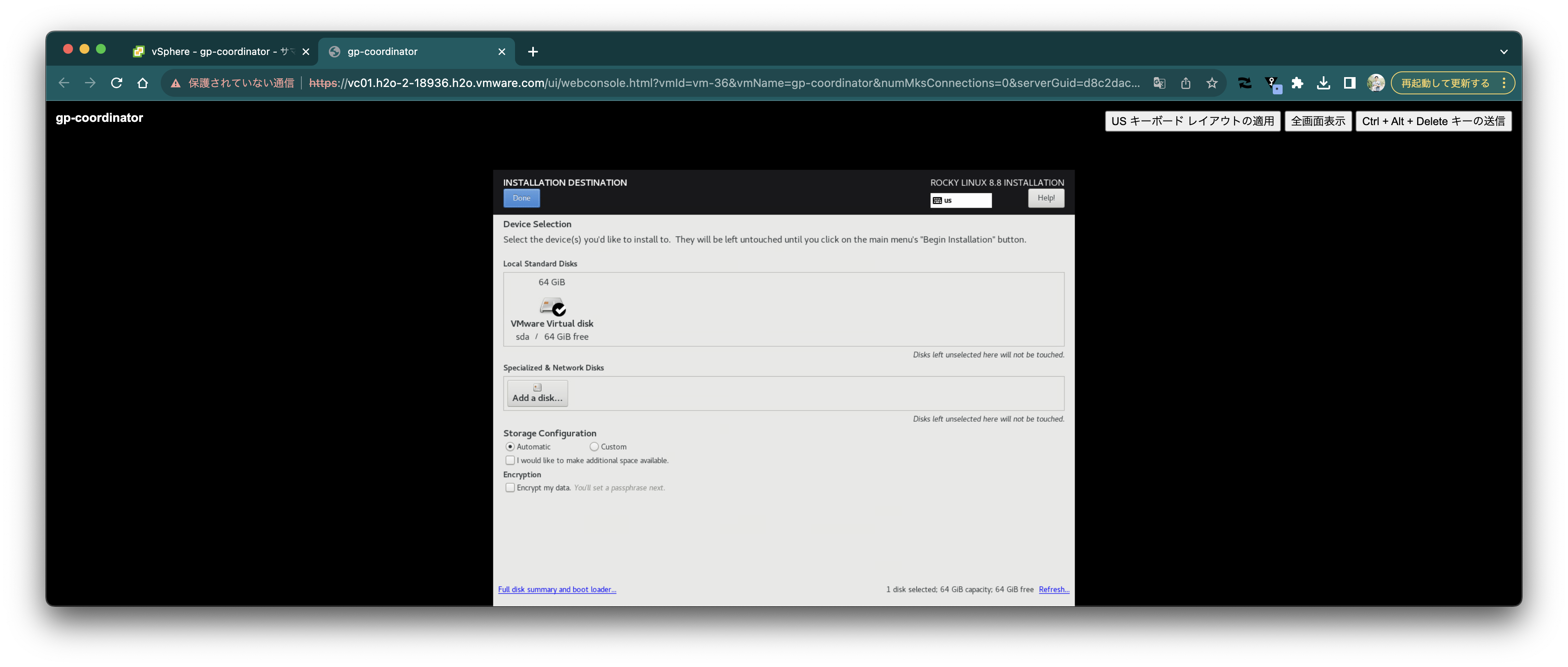

Installation Destinationはデフォルトのまま、"Done"をクリックします。

Installation Destinationはデフォルトのまま、"Done"をクリックします。

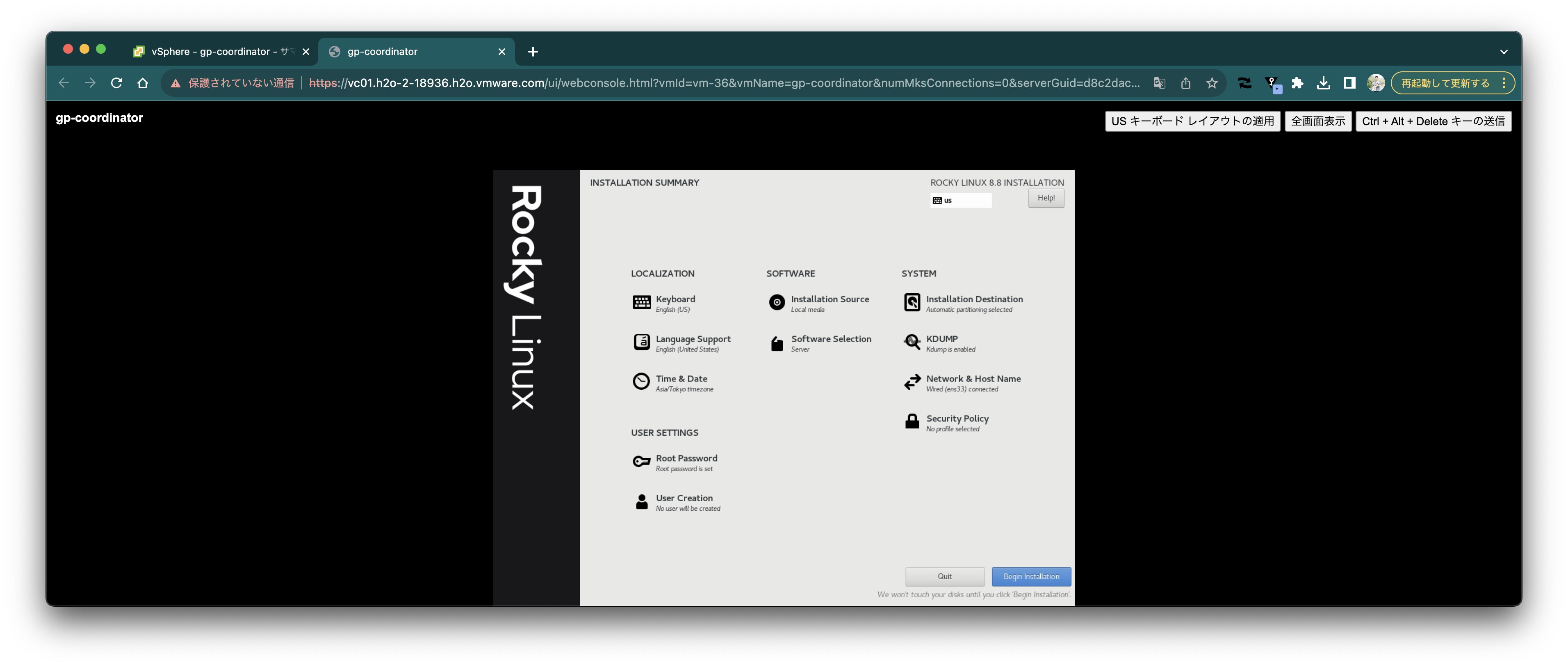

"Begin Installation"をクリックします。

"Begin Installation"をクリックします。

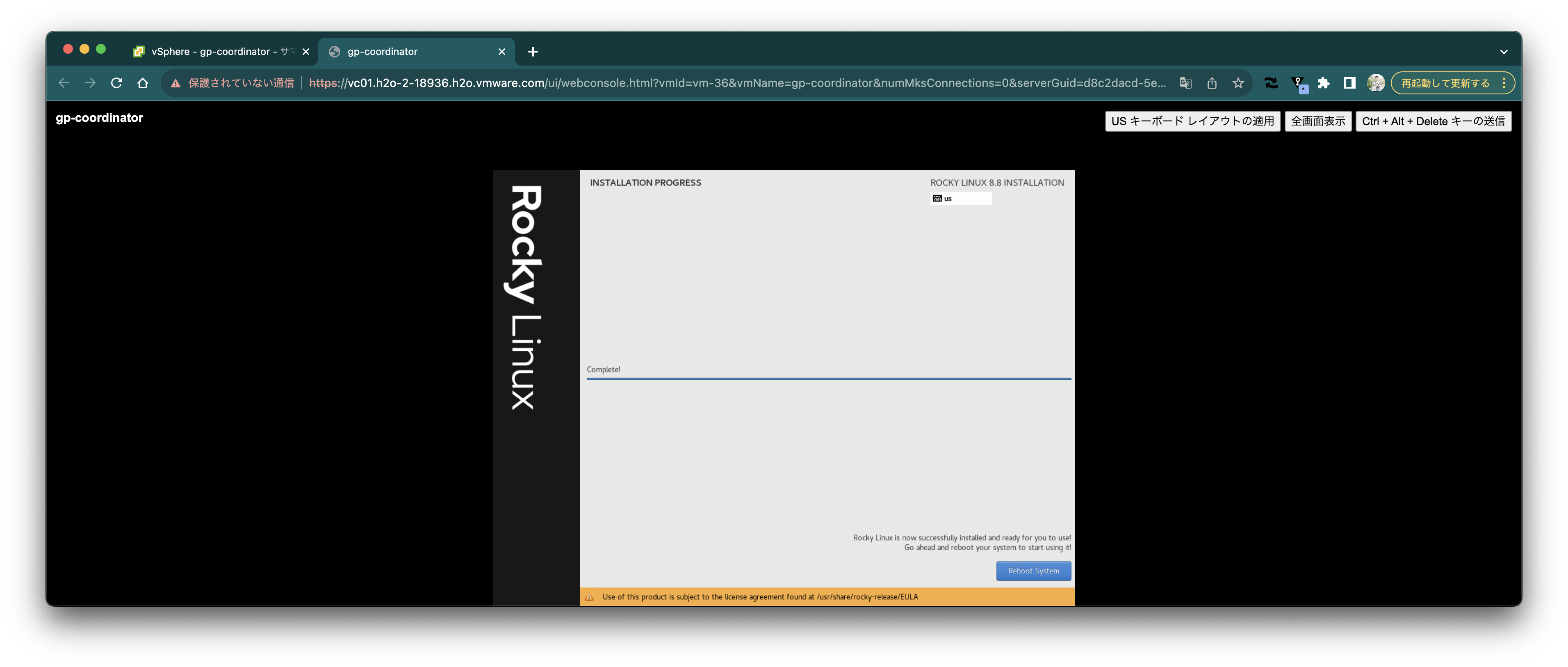

完了したら"Reboot System"をクリックします。

完了したら"Reboot System"をクリックします。

ターミナルを開き、控えたIPアドレスに対してrootユーザーでsshログインします。

```

ssh root@10.220.46.50

```

### OSの初期セットアップ

`gp-coordinator` VM上で次のコマンドを実行します

```bash

sudo yum -y install epel-release

sudo yum -y config-manager --set-enabled powertools

sudo yum -y install apr \

apr-util \

bash \

bzip2 \

curl \

lsof \

cmake \

bind-utils \

krb5-libs \

libcgroup-tools \

libcurl \

libevent \

libxml2 \

libyaml \

zlib \

openldap \

openssh-clients \

openssh-server \

openssl \

openssl-libs \

sshpass \

perl \

python39 \

readline \

rsync \

R \

sed \

tar \

zip \

apr \

apr-util \

libyaml \

libevent \

java-11-openjdk-devel

echo 2 | sudo update-alternatives --config java

cat < /dev/null

net.ipv4.ip_local_reserved_ports=65330

EOF

sudo sysctl --system

cat < /dev/null

* soft nofile 65536

* hard nofile 65536

EOF

```

### VMware Greenplum® のダウンロード

VMware Greenplum® のrpmをダウンロードします。ここでは [`pivnet`](https://github.com/pivotal-cf/pivnet-cli) CLIを使用します。API Tokenは [こちら](https://network.tanzu.vmware.com/users/dashboard/edit-profile) から取得できます。

```bash

mkdir -p ~/Downloads/

cd ~/Downloads

curl -sL https://github.com/pivotal-cf/pivnet-cli/releases/download/v3.0.1/pivnet-linux-amd64-3.0.1 > pivnet

chmod +x pivnet

sudo mv pivnet /usr/local/bin/

pivnet login --api-token=*********************-r

pivnet download-product-files --product-slug='vmware-greenplum' --release-version='7.0.0' --glob='*.rpm'

pivnet download-product-files --product-slug='vmware-greenplum' --release-version='7.0.0' --glob='*.gppkg'

pivnet download-product-files --product-slug='vmware-greenplum' --release-version='7.0.0' --glob='*.tar.gz'

```

### VMware Greenplum®のインストール

`gpadmin`ユーザーを作ってrpmをダウンロードしたインストールします。

`gp-coordinator`,`gp-segment1`, `gp-segment2` それぞれで以下を実行します。

```bash

sudo groupadd gpadmin

sudo useradd -m gpadmin -g gpadmin

echo gpadmin:Greenplum123 | sudo chpasswd

echo 'gpadmin ALL=(ALL) NOPASSWD: ALL' | sudo EDITOR='tee -a' visudo

sudo yum -y install ./greenplum-db-7.0.0-el8-x86_64.rpm

sudo chown -R gpadmin:gpadmin /usr/local/greenplum*

sudo chown -R gpadmin:gpadmin /root/Downloads

sudo chgrp -R gpadmin /usr/local/greenplum*

```

Greenplum用の環境変数などが設定されたスクリプトを `.bashrc` で読み込むようにします。

```bash

cat <

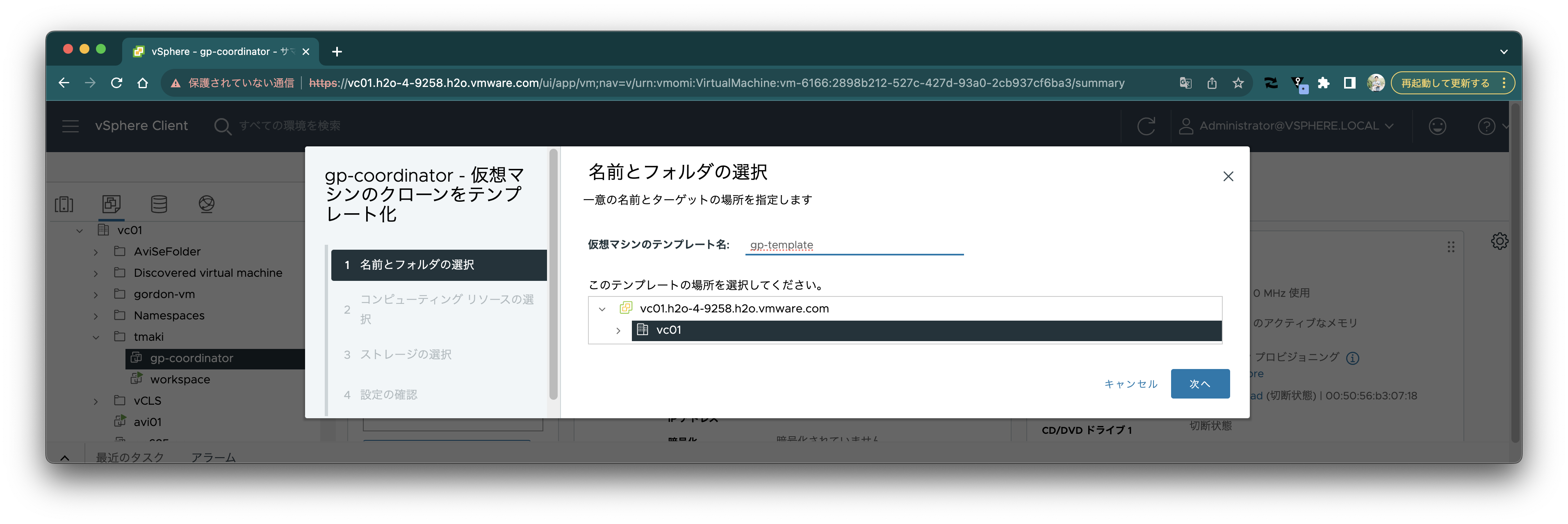

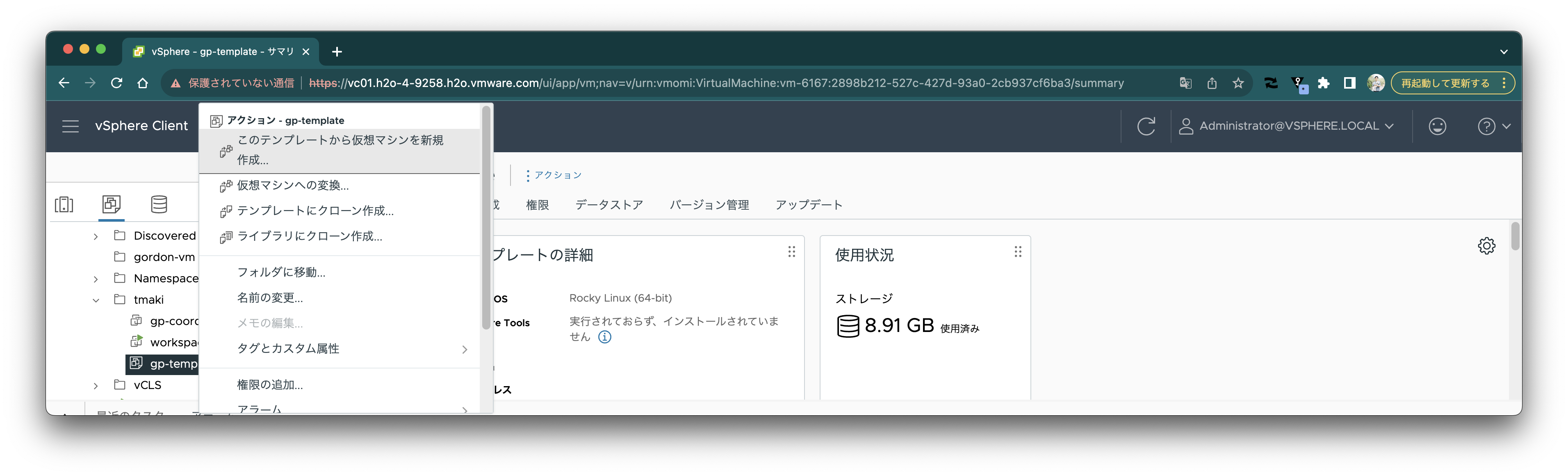

"クローン作成" => "テンプレートにクローン作成" を選択します。

ターミナルを開き、控えたIPアドレスに対してrootユーザーでsshログインします。

```

ssh root@10.220.46.50

```

### OSの初期セットアップ

`gp-coordinator` VM上で次のコマンドを実行します

```bash

sudo yum -y install epel-release

sudo yum -y config-manager --set-enabled powertools

sudo yum -y install apr \

apr-util \

bash \

bzip2 \

curl \

lsof \

cmake \

bind-utils \

krb5-libs \

libcgroup-tools \

libcurl \

libevent \

libxml2 \

libyaml \

zlib \

openldap \

openssh-clients \

openssh-server \

openssl \

openssl-libs \

sshpass \

perl \

python39 \

readline \

rsync \

R \

sed \

tar \

zip \

apr \

apr-util \

libyaml \

libevent \

java-11-openjdk-devel

echo 2 | sudo update-alternatives --config java

cat < /dev/null

net.ipv4.ip_local_reserved_ports=65330

EOF

sudo sysctl --system

cat < /dev/null

* soft nofile 65536

* hard nofile 65536

EOF

```

### VMware Greenplum® のダウンロード

VMware Greenplum® のrpmをダウンロードします。ここでは [`pivnet`](https://github.com/pivotal-cf/pivnet-cli) CLIを使用します。API Tokenは [こちら](https://network.tanzu.vmware.com/users/dashboard/edit-profile) から取得できます。

```bash

mkdir -p ~/Downloads/

cd ~/Downloads

curl -sL https://github.com/pivotal-cf/pivnet-cli/releases/download/v3.0.1/pivnet-linux-amd64-3.0.1 > pivnet

chmod +x pivnet

sudo mv pivnet /usr/local/bin/

pivnet login --api-token=*********************-r

pivnet download-product-files --product-slug='vmware-greenplum' --release-version='7.0.0' --glob='*.rpm'

pivnet download-product-files --product-slug='vmware-greenplum' --release-version='7.0.0' --glob='*.gppkg'

pivnet download-product-files --product-slug='vmware-greenplum' --release-version='7.0.0' --glob='*.tar.gz'

```

### VMware Greenplum®のインストール

`gpadmin`ユーザーを作ってrpmをダウンロードしたインストールします。

`gp-coordinator`,`gp-segment1`, `gp-segment2` それぞれで以下を実行します。

```bash

sudo groupadd gpadmin

sudo useradd -m gpadmin -g gpadmin

echo gpadmin:Greenplum123 | sudo chpasswd

echo 'gpadmin ALL=(ALL) NOPASSWD: ALL' | sudo EDITOR='tee -a' visudo

sudo yum -y install ./greenplum-db-7.0.0-el8-x86_64.rpm

sudo chown -R gpadmin:gpadmin /usr/local/greenplum*

sudo chown -R gpadmin:gpadmin /root/Downloads

sudo chgrp -R gpadmin /usr/local/greenplum*

```

Greenplum用の環境変数などが設定されたスクリプトを `.bashrc` で読み込むようにします。

```bash

cat <

"クローン作成" => "テンプレートにクローン作成" を選択します。

テンプレート名を`gp-template`にし、適当な場所に保存します。

テンプレート名を`gp-template`にし、適当な場所に保存します。

`gp-coordinator`を再びパワーオンしておきます。

`gp-coordinator`を再びパワーオンしておきます。

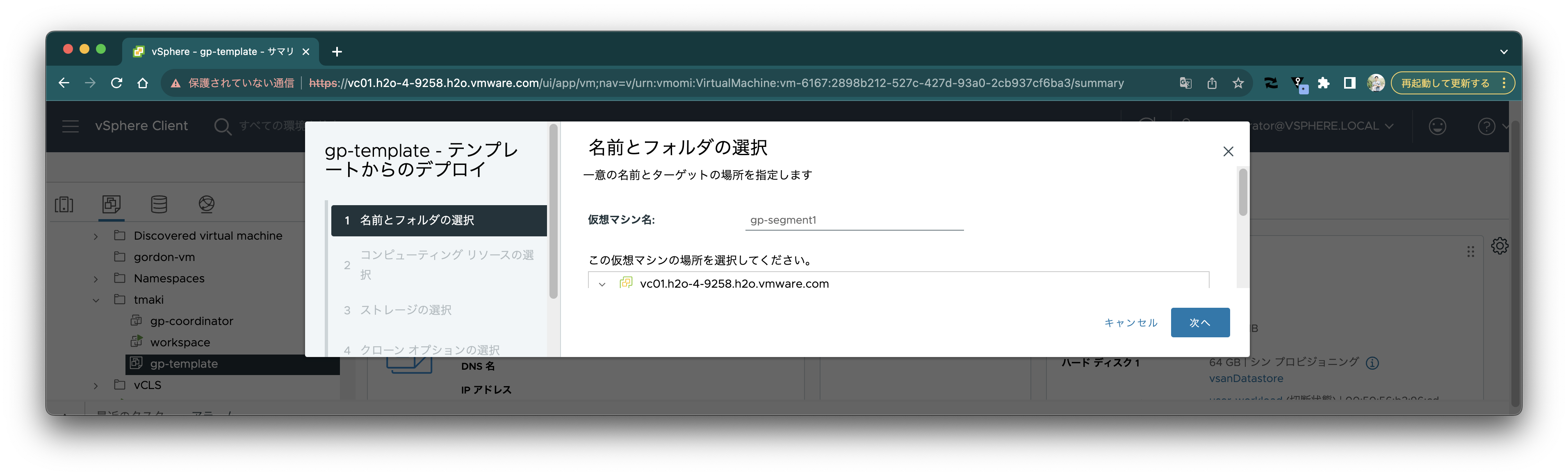

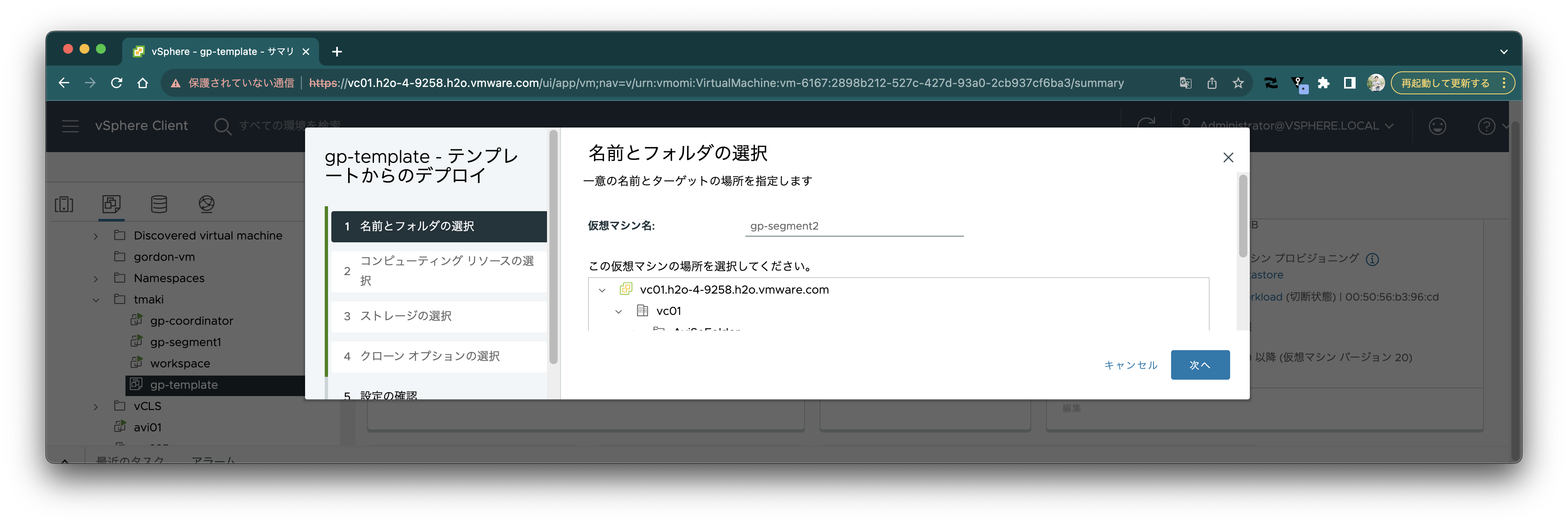

`gp-template`テンプレートから仮想マシンを作成します。

`gp-template`テンプレートから仮想マシンを作成します。

名前は`gp-segment1`にします。

名前は`gp-segment1`にします。

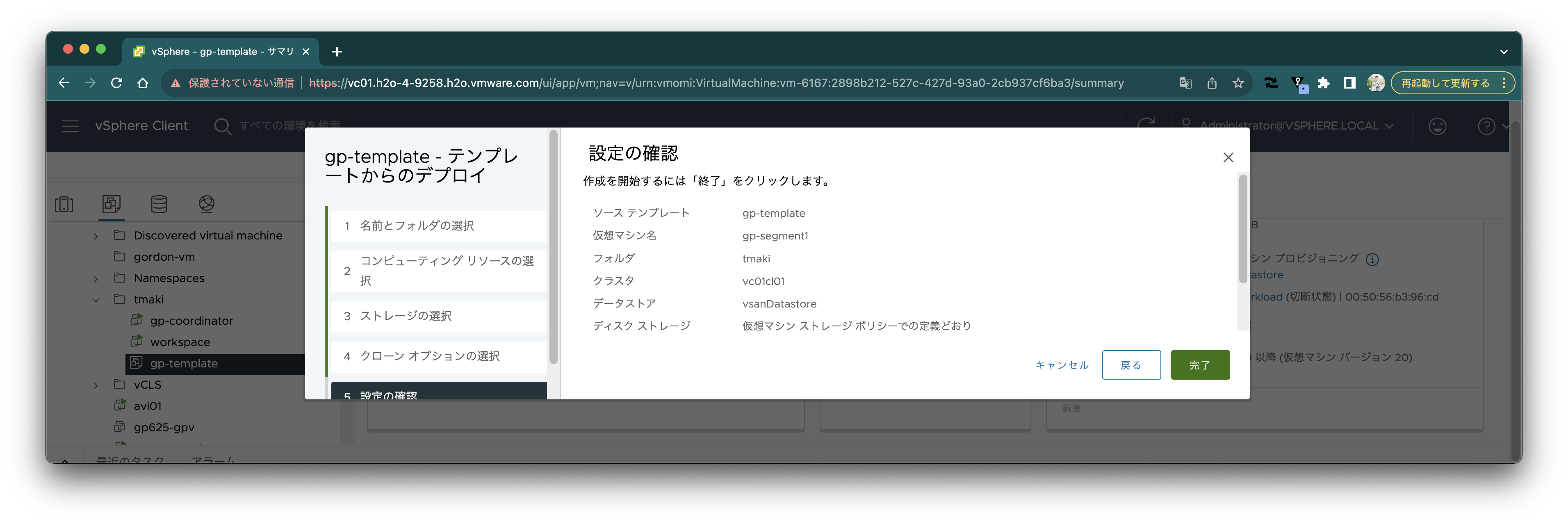

"作成後に仮想マシンをパワーオン" にチェックを入れます。

"作成後に仮想マシンをパワーオン" にチェックを入れます。

VMを作成します。

VMを作成します。

同じ手順で、`gp-segment2`も作成します。

同じ手順で、`gp-segment2`も作成します。

`gp-segment1`のWebコンソールを開きます。

`gp-segment1`のWebコンソールを開きます。

次のコマンドを実行し、hostnameを変更します。

```

hostnamectl set-hostname gp-segment1

```

また、`ip addr`コマンドでIPアドレスを確認します。

同様に`gp-segment2`のWebコンソールを開き、次のコマンドを実行し、hostnameを変更します。

```

hostnamectl set-hostname gp-segment2

```

また、`ip addr`コマンドでIPアドレスを確認します。

`gp-coordinator`、`gp-segment1`、`gp-segment2`それぞれで次のコマンドを実行し、`/etc/hosts`ファイルにそれぞれのIPアドレスを記述します。IPアドレスは自分の環境に合わせて変更してください。

```

cat < /dev/null

10.220.46.50 gp-coordinator

10.220.46.55 gp-segment1

10.220.46.56 gp-segment2

127.0.0.1 localhost

EOF

```

この記事ではDHCPのまま作業しますが、必要に応じてstatic ipを設定してください。

### SSHの設定

各ノード間をパスワードレスでssh通信できるようにする必要があります。

まずは`gp-coordinator`、`gp-segment1`、`gp-segment2`それぞれで次のコマンドを実行して鍵を生成します。

```bash

sudo su - gpadmin bash -c 'ssh-keygen -m PEM -t rsa -b 4096 -q -N "" -f /home/gpadmin/.ssh/id_rsa'

```

以下の作業は `gp-coordinator` 上でのみで行います。`gpadmin` ユーザーで作業します。

`gp-coordinator` の公開鍵を各ホストの `/home/gpadmin/.ssh/authorized_keys` に追記します。

```bash

sudo su - gpadmin

SSHPASS=Greenplum123 sshpass -e ssh-copy-id -o StrictHostKeyChecking=no gp-coordinator

SSHPASS=Greenplum123 sshpass -e ssh-copy-id -o StrictHostKeyChecking=no gp-segment1

SSHPASS=Greenplum123 sshpass -e ssh-copy-id -o StrictHostKeyChecking=no gp-segment2

```

次のコマンドで各ホストの公開鍵を各ホストの `/home/gpadmin/.ssh/known_hosts` に追記します。

```bash

cat < hostfile_exkeys

gp-coordinator

gp-segment1

gp-segment2

EOF

gpssh-exkeys -f hostfile_exkeys

```

### VMware Greenplum®のセットアップ

以下の作業は `gp-coordinator` 上でのみで行います。引き続き `gpadmin` ユーザーで作業します。

次のコマンドで各ホスト用のディレクトリの作成をします。

```bash

sudo mkdir -p /data/coordinator

sudo chown gpadmin:gpadmin /data/coordinator

cat < hostfile_gpssh_segonly

gp-segment1

gp-segment2

EOF

gpssh -f hostfile_gpssh_segonly -e 'sudo mkdir -p /data/primary'

gpssh -f hostfile_gpssh_segonly -e 'sudo mkdir -p /data/mirror'

gpssh -f hostfile_gpssh_segonly -e 'sudo chown -R gpadmin /data/*'

```

次のコマンドでVMware Greenplum®を初期化します。

```bash

mkdir -p gpconfigs

cat < gpconfigs/hostfile_gpinitsystem

gp-segment1

gp-segment2

EOF

cp $GPHOME/docs/cli_help/gpconfigs/gpinitsystem_config /home/gpadmin/gpconfigs/gpinitsystem_config

sed -i.bak \

-e 's|/data1/primary /data1/primary /data1/primary /data2/primary /data2/primary /data2/primary|/data/primary|' \

-e 's|/data1/mirror /data1/mirror /data1/mirror /data2/mirror /data2/mirror /data2/mirror|/data/mirror|' \

-e 's/#MIRROR_PORT_BASE/MIRROR_PORT_BASE/' \

-e 's/#declare -a MIRROR_DATA_DIRECTORY/declare -a MIRROR_DATA_DIRECTORY/' \

-e 's/COORDINATOR_HOSTNAME=cdw/COORDINATOR_HOSTNAME=gp-coordinator/' \

gpconfigs/gpinitsystem_config

gpinitsystem -c gpconfigs/gpinitsystem_config -h gpconfigs/hostfile_gpinitsystem

```

次のようなログが出力され、確認が求められます。

```

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Checking configuration parameters, please wait...

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Reading Greenplum configuration file gpconfigs/gpinitsystem_config

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Locale has not been set in gpconfigs/gpinitsystem_config, will set to default value

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-No DATABASE_NAME set, will exit following template1 updates

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-COORDINATOR_MAX_CONNECT not set, will set to default value 250

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Checking configuration parameters, Completed

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Commencing multi-home checks, please wait...

..

20231002:14:33:41:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Configuring build for standard array

20231002:14:33:41:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Commencing multi-home checks, Completed

20231002:14:33:41:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Building primary segment instance array, please wait...

..

20231002:14:33:43:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Building group mirror array type , please wait...

..

20231002:14:33:45:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Checking Coordinator host

20231002:14:33:45:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Checking new segment hosts, please wait...

....

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Checking new segment hosts, Completed

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Greenplum Database Creation Parameters

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:---------------------------------------

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator Configuration

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:---------------------------------------

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator hostname = gp-coordinator

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator port = 5432

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator instance dir = /data/coordinator/gpseg-1

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator LOCALE =

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Greenplum segment prefix = gpseg

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator Database =

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator connections = 250

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator buffers = 128000kB

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Segment connections = 750

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Segment buffers = 128000kB

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Encoding = UNICODE

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Postgres param file = Off

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Initdb to be used = /usr/local/greenplum-db-7.0.0/bin/initdb

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-GP_LIBRARY_PATH is = /usr/local/greenplum-db-7.0.0/lib

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-HEAP_CHECKSUM is = on

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-HBA_HOSTNAMES is = 0

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Ulimit check = Passed

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Array host connect type = Single hostname per node

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator IP address [1] = ::1

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator IP address [2] = 10.220.46.50

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator IP address [3] = fe80::250:56ff:feb3:718

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Standby Coordinator = Not Configured

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Number of primary segments = 1

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total Database segments = 2

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Trusted shell = ssh

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Number segment hosts = 2

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Mirror port base = 7000

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Number of mirror segments = 1

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Mirroring config = ON

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Mirroring type = Group

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:----------------------------------------

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Greenplum Primary Segment Configuration

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:----------------------------------------

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-gp-segment1 6000 gp-segment1 /data/primary/gpseg0 2

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-gp-segment2 6000 gp-segment2 /data/primary/gpseg1 3

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:---------------------------------------

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Greenplum Mirror Segment Configuration

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:---------------------------------------

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-gp-segment2 7000 gp-segment2 /data/mirror/gpseg0 4

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-gp-segment1 7000 gp-segment1 /data/mirror/gpseg1 5

Continue with Greenplum creation Yy|Nn (default=N):

>

```

`y`を入力すると、次のようなログが出力されます。

```

20231002:14:33:54:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Building the Coordinator instance database, please wait...

20231002:14:33:57:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Starting the Coordinator in admin mode

20231002:14:33:57:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Commencing parallel build of primary segment instances

20231002:14:33:57:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Spawning parallel processes batch [1], please wait...

..

20231002:14:33:58:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Waiting for parallel processes batch [1], please wait...

............

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:------------------------------------------------

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Parallel process exit status

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:------------------------------------------------

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total processes marked as completed = 2

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total processes marked as killed = 0

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total processes marked as failed = 0

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:------------------------------------------------

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Removing back out file

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-No errors generated from parallel processes

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Restarting the Greenplum instance in production mode

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Starting gpstop with args: -a -l /home/gpadmin/gpAdminLogs -m -d /data/coordinator/gpseg-1

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Gathering information and validating the environment...

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Obtaining Greenplum Coordinator catalog information

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Obtaining Segment details from coordinator...

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Greenplum Version: 'postgres (Greenplum Database) 7.0.0 build commit:0a7a3566873325aca1789ae6f818c80f17a9402d'

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Commencing Coordinator instance shutdown with mode='smart'

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Coordinator segment instance directory=/data/coordinator/gpseg-1

20231002:14:34:11:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Stopping coordinator segment and waiting for user connections to finish ...

server shutting down

20231002:14:34:12:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Attempting forceful termination of any leftover coordinator process

20231002:14:34:12:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Terminating processes for segment /data/coordinator/gpseg-1

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Starting gpstart with args: -a -l /home/gpadmin/gpAdminLogs -d /data/coordinator/gpseg-1

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Gathering information and validating the environment...

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Greenplum Binary Version: 'postgres (Greenplum Database) 7.0.0 build commit:0a7a3566873325aca1789ae6f818c80f17a9402d'

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Greenplum Catalog Version: '302307241'

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Starting Coordinator instance in admin mode

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-CoordinatorStart pg_ctl cmd is env GPSESSID=0000000000 GPERA=None $GPHOME/bin/pg_ctl -D /data/coordinator/gpseg-1 -l /data/coordinator/gpseg-1/log/startup.log -w -t 600 -o " -c gp_role=utility " start

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Obtaining Greenplum Coordinator catalog information

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Obtaining Segment details from coordinator...

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Setting new coordinator era

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Coordinator Started...

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Shutting down coordinator

20231002:14:34:18:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Commencing parallel segment instance startup, please wait...

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Process results...

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-----------------------------------------------------

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:- Successful segment starts = 2

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:- Failed segment starts = 0

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:- Skipped segment starts (segments are marked down in configuration) = 0

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-----------------------------------------------------

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Successfully started 2 of 2 segment instances

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-----------------------------------------------------

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Starting Coordinator instance gp-coordinator directory /data/coordinator/gpseg-1

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-CoordinatorStart pg_ctl cmd is env GPSESSID=0000000000 GPERA=ab03d3b04e6ea60f_231002143415 $GPHOME/bin/pg_ctl -D /data/coordinator/gpseg-1 -l /data/coordinator/gpseg-1/log/startup.log -w -t 600 -o " -c gp_role=dispatch " start

20231002:14:34:20:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Command pg_ctl reports Coordinator gp-coordinator instance active

20231002:14:34:20:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Connecting to db template1 on host localhost

20231002:14:34:20:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-No standby coordinator configured. skipping...

20231002:14:34:20:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Database successfully started

20231002:14:34:20:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Completed restart of Greenplum instance in production mode

20231002:14:34:20:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Creating core GPDB extensions

20231002:14:34:21:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Importing system collations

20231002:14:34:28:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Commencing parallel build of mirror segment instances

20231002:14:34:28:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Spawning parallel processes batch [1], please wait...

..

20231002:14:34:28:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Waiting for parallel processes batch [1], please wait...

......

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:------------------------------------------------

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Parallel process exit status

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:------------------------------------------------

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total processes marked as completed = 2

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total processes marked as killed = 0

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total processes marked as failed = 0

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:------------------------------------------------

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Scanning utility log file for any warning messages

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Log file scan check passed

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Greenplum Database instance successfully created

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-------------------------------------------------------

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-To complete the environment configuration, please

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-update gpadmin .bashrc file with the following

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-1. Ensure that the greenplum_path.sh file is sourced

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-2. Add "export COORDINATOR_DATA_DIRECTORY=/data/coordinator/gpseg-1"

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:- to access the Greenplum scripts for this instance:

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:- or, use -d /data/coordinator/gpseg-1 option for the Greenplum scripts

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:- Example gpstate -d /data/coordinator/gpseg-1

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Script log file = /home/gpadmin/gpAdminLogs/gpinitsystem_20231002.log

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-To remove instance, run gpdeletesystem utility

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-To initialize a Standby Coordinator Segment for this Greenplum instance

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Review options for gpinitstandby

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-------------------------------------------------------

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-The Coordinator /data/coordinator/gpseg-1/pg_hba.conf post gpinitsystem

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-has been configured to allow all hosts within this new

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-array to intercommunicate. Any hosts external to this

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-new array must be explicitly added to this file

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Refer to the Greenplum Admin support guide which is

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-located in the /usr/local/greenplum-db-7.0.0/docs directory

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-------------------------------------------------------

```

`Greenplum Database instance successfully created` と出力されたのでセットアップが成功したように見えます。

`.barshrc`に次の環境変数を追加します。

```

cat < /dev/null

export COORDINATOR_DATA_DIRECTORY=/data/coordinator/gpseg-1

export PGPORT=5432

export PGUSER=gpadmin

export PGDATABASE=gpadmin

export LD_PRELOAD=/lib64/libz.so.1 ps

EOF

source /home/gpadmin/.bashrc

```

### データベースの作成

データベースの作成はPostgreSQLと基本的に同じです。以下の作業は `gp-coordinator` 上でのみで行います。引き続き `gpadmin` ユーザーで作業します。

```bash

createdb test

```

### VMware Greenplum®へのアクセス

VMware Greenplum®へのアクセスもPostgreSQLと基本的に同じです。以下の作業は `gp-coordinator` 上でのみで行います。引き続き `gpadmin` ユーザーで作業します。

```bash

$ psql -d test

psql (12.12)

Type "help" for help.

```

次のSQLを実行します。

```sql

CREATE TABLE IF NOT EXISTS organization

(

organization_id BIGINT PRIMARY KEY,

organization_name VARCHAR(255) NOT NULL

);

INSERT INTO organization(organization_id, organization_name) VALUES(1, 'foo');

INSERT INTO organization(organization_id, organization_name) VALUES(2, 'bar');

```

デフォルではPrimary Keyでデータが分散されるようです。`gp_segment_id`というカラムでデータがどのセグメントに配置されているか確認することができます。

```sql

test=# select organization_id,organization_name,gp_segment_id from organization;

organization_id | organization_name | gp_segment_id

-----------------+-------------------+---------------

2 | bar | 0

1 | foo | 1

(2 rows)

```

とりあえず、ここまで確認できました。

### Extensionの有効化

https://docs.vmware.com/en/VMware-Greenplum/7/greenplum-database/install_guide-install_modules.html

いくつかのExtensionを有効化します。

#### uuid-ossp

https://docs.vmware.com/en/VMware-Greenplum/7/greenplum-database/ref_guide-modules-uuid-ossp.html

```

test=# CREATE EXTENSION "uuid-ossp";

CREATE EXTENSION

test=# select uuid_generate_v4();

uuid_generate_v4

--------------------------------------

230d1dd3-aca8-4140-b2fe-83b32cacf954

(1 row)

```

#### pgvector

https://docs.vmware.com/en/VMware-Greenplum/7/greenplum-database/ref_guide-modules-pgvector-pgvector.html

```sql

test=# CREATE EXTENSION vector;

CREATE EXTENSION

```

```sql

CREATE TABLE items (id bigserial PRIMARY KEY, embedding vector(3));

INSERT INTO items (embedding) VALUES ('[1,2,3]'), ('[4,5,6]');

SELECT * FROM items ORDER BY embedding <-> '[3,1,2]' LIMIT 5;

```

```

id | embedding

----+-----------

1 | [1,2,3]

2 | [4,5,6]

(2 rows)

```

#### postgresml

https://docs.vmware.com/en/VMware-Greenplum/7/greenplum-database/ref_guide-modules-postgresml.html

gppkgファイルは`pivnet`コマンドでrpmとともにダウンロード済みです。

```

sudo cp /root/Downloads/DataSciencePython3.9-2.0.0-gp7-el8_x86_64.gppkg ./

gppkg install -a DataSciencePython3.9-2.0.0-gp7-el8_x86_64.gppkg

```

```

createdb gpadmin

gpconfig -c shared_preload_libraries -v 'pgml'

```

Greenplumを再起動します。

```

gpstop -r -a

```

```

gpconfig -c pgml.venv -v '$GPHOME/ext/DataSciencePython3.9'

```

> 次のエラーが出る場合は、`gpstop -r`が漏れています。

>

> ```

> gpconfig:gp-coordinator:gpadmin-[CRITICAL]:-not a valid GUC: pgml.venv

> not a valid GUC: pgml.venv

> ```

設定をリロードします。

```

gpstop -u

```

```sql

test=# CREATE EXTENSION pgml;

INFO: Python version: 3.9.16 (main, Jul 3 2023, 20:07:32)

[GCC 8.5.0 20210514 (Red Hat 8.5.0-18)]

INFO: Scikit-learn 1.1.2, XGBoost 1.6.2, LightGBM 4.0.0, NumPy 1.22.1

CREATE EXTENSION

```

> 次のエラーが出る場合は、`gpstop -u`が漏れています。

>

> ```

> test=# CREATE EXTENSION IF NOT EXISTS pgml;

> INFO: Python version: 3.9.16 (main, Jul 3 2023, 20:07:32)

> [GCC 8.5.0 20210514 (Red Hat 8.5.0-18)]

> ERROR: The xgboost package is missing. Install it with `sudo pip3 install xgboost`

> ModuleNotFoundError: No module named 'xgboost' (api.rs:36)

> ```

PostgresMLのtransformを試します。

```sql

SELECT pgml.transform(

'question-answering',

inputs => ARRAY[

'{

"question": "What does the customer want?",

"context": "Dear Amazon, last week I ordered an Optimus Prime action figure from your online store in Germany. Unfortunately, when I opened the package, I discovered to my horror that I had been sent an action figure of Megatron instead! As a lifelong enemy of the Decepticons, I hope you can understand my dilemma. To resolve the issue, I demand an exchange of Megatron for the Optimus Prime figure I ordered. Enclosed are copies of my records concerning this purchase. I expect to hear from you soon. Sincerely, Bumblebee."}'

]

) AS answer;

```

初回はモデルのロードに時間がかかりますが、2回目以降は速いです。

```

answer

----------------------------------------------------------------------------------------------

{"end": 358, "score": 0.6312912106513977, "start": 335, "answer": "an exchange of Megatron"}

(1 row)

```

### ユーザー追加

以下のSQLを実行して、`postgresml`ユーザーと`postgresml`データベースを作ります。手順はPostgreSQLと同じです。

```

psql -c "CREATE ROLE postgresml PASSWORD 'postgresml' SUPERUSER LOGIN"

createdb postgresml --owner postgresml

psql -c 'ALTER ROLE postgresml SET search_path TO public,pgml'

```

外からアクセスできるように`gp-coordinator`上で、次の設定ファイルを変更し、Greenplumを再起動します。

```

cat < /dev/null

host postgresml postgresml 0.0.0.0/0 md5

EOF

gpstop -r -a

```

`gp-coordinator`の外から`gp-coordinator`のIPにアクセスします。

```

$ PGPASSWORD=postgresml psql -U postgresml -d postgresml -h 10.220.46.50

psql (15.3, server 12.12)

Type "help" for help.

postgresml=#

```

以下のSQLを実行して、結果が変えればOKです。

```sql

CREATE EXTENSION IF NOT EXISTS pgml;

SELECT pgml.transform(

'question-answering',

inputs => ARRAY[

'{

"question": "What does the customer want?",

"context": "Dear Amazon, last week I ordered an Optimus Prime action figure from your online store in Germany. Unfortunately, when I opened the package, I discovered to my horror that I had been sent an action figure of Megatron instead! As a lifelong enemy of the Decepticons, I hope you can understand my dilemma. To resolve the issue, I demand an exchange of Megatron for the Optimus Prime figure I ordered. Enclosed are copies of my records concerning this purchase. I expect to hear from you soon. Sincerely, Bumblebee."}'

]

) AS answer;

```

---

並列分散処理が得意なGreenplumでvgvectorやPostgresMLが利用できることで、[Retrieval Augmented Generation (RAG)](https://ai.meta.com/blog/retrieval-augmented-generation-streamlining-the-creation-of-intelligent-natural-language-processing-models/)パターンに大きく活用できそうです。

次のコマンドを実行し、hostnameを変更します。

```

hostnamectl set-hostname gp-segment1

```

また、`ip addr`コマンドでIPアドレスを確認します。

同様に`gp-segment2`のWebコンソールを開き、次のコマンドを実行し、hostnameを変更します。

```

hostnamectl set-hostname gp-segment2

```

また、`ip addr`コマンドでIPアドレスを確認します。

`gp-coordinator`、`gp-segment1`、`gp-segment2`それぞれで次のコマンドを実行し、`/etc/hosts`ファイルにそれぞれのIPアドレスを記述します。IPアドレスは自分の環境に合わせて変更してください。

```

cat < /dev/null

10.220.46.50 gp-coordinator

10.220.46.55 gp-segment1

10.220.46.56 gp-segment2

127.0.0.1 localhost

EOF

```

この記事ではDHCPのまま作業しますが、必要に応じてstatic ipを設定してください。

### SSHの設定

各ノード間をパスワードレスでssh通信できるようにする必要があります。

まずは`gp-coordinator`、`gp-segment1`、`gp-segment2`それぞれで次のコマンドを実行して鍵を生成します。

```bash

sudo su - gpadmin bash -c 'ssh-keygen -m PEM -t rsa -b 4096 -q -N "" -f /home/gpadmin/.ssh/id_rsa'

```

以下の作業は `gp-coordinator` 上でのみで行います。`gpadmin` ユーザーで作業します。

`gp-coordinator` の公開鍵を各ホストの `/home/gpadmin/.ssh/authorized_keys` に追記します。

```bash

sudo su - gpadmin

SSHPASS=Greenplum123 sshpass -e ssh-copy-id -o StrictHostKeyChecking=no gp-coordinator

SSHPASS=Greenplum123 sshpass -e ssh-copy-id -o StrictHostKeyChecking=no gp-segment1

SSHPASS=Greenplum123 sshpass -e ssh-copy-id -o StrictHostKeyChecking=no gp-segment2

```

次のコマンドで各ホストの公開鍵を各ホストの `/home/gpadmin/.ssh/known_hosts` に追記します。

```bash

cat < hostfile_exkeys

gp-coordinator

gp-segment1

gp-segment2

EOF

gpssh-exkeys -f hostfile_exkeys

```

### VMware Greenplum®のセットアップ

以下の作業は `gp-coordinator` 上でのみで行います。引き続き `gpadmin` ユーザーで作業します。

次のコマンドで各ホスト用のディレクトリの作成をします。

```bash

sudo mkdir -p /data/coordinator

sudo chown gpadmin:gpadmin /data/coordinator

cat < hostfile_gpssh_segonly

gp-segment1

gp-segment2

EOF

gpssh -f hostfile_gpssh_segonly -e 'sudo mkdir -p /data/primary'

gpssh -f hostfile_gpssh_segonly -e 'sudo mkdir -p /data/mirror'

gpssh -f hostfile_gpssh_segonly -e 'sudo chown -R gpadmin /data/*'

```

次のコマンドでVMware Greenplum®を初期化します。

```bash

mkdir -p gpconfigs

cat < gpconfigs/hostfile_gpinitsystem

gp-segment1

gp-segment2

EOF

cp $GPHOME/docs/cli_help/gpconfigs/gpinitsystem_config /home/gpadmin/gpconfigs/gpinitsystem_config

sed -i.bak \

-e 's|/data1/primary /data1/primary /data1/primary /data2/primary /data2/primary /data2/primary|/data/primary|' \

-e 's|/data1/mirror /data1/mirror /data1/mirror /data2/mirror /data2/mirror /data2/mirror|/data/mirror|' \

-e 's/#MIRROR_PORT_BASE/MIRROR_PORT_BASE/' \

-e 's/#declare -a MIRROR_DATA_DIRECTORY/declare -a MIRROR_DATA_DIRECTORY/' \

-e 's/COORDINATOR_HOSTNAME=cdw/COORDINATOR_HOSTNAME=gp-coordinator/' \

gpconfigs/gpinitsystem_config

gpinitsystem -c gpconfigs/gpinitsystem_config -h gpconfigs/hostfile_gpinitsystem

```

次のようなログが出力され、確認が求められます。

```

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Checking configuration parameters, please wait...

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Reading Greenplum configuration file gpconfigs/gpinitsystem_config

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Locale has not been set in gpconfigs/gpinitsystem_config, will set to default value

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-No DATABASE_NAME set, will exit following template1 updates

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-COORDINATOR_MAX_CONNECT not set, will set to default value 250

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Checking configuration parameters, Completed

20231002:14:33:40:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Commencing multi-home checks, please wait...

..

20231002:14:33:41:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Configuring build for standard array

20231002:14:33:41:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Commencing multi-home checks, Completed

20231002:14:33:41:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Building primary segment instance array, please wait...

..

20231002:14:33:43:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Building group mirror array type , please wait...

..

20231002:14:33:45:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Checking Coordinator host

20231002:14:33:45:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Checking new segment hosts, please wait...

....

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Checking new segment hosts, Completed

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Greenplum Database Creation Parameters

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:---------------------------------------

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator Configuration

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:---------------------------------------

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator hostname = gp-coordinator

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator port = 5432

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator instance dir = /data/coordinator/gpseg-1

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator LOCALE =

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Greenplum segment prefix = gpseg

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator Database =

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator connections = 250

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator buffers = 128000kB

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Segment connections = 750

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Segment buffers = 128000kB

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Encoding = UNICODE

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Postgres param file = Off

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Initdb to be used = /usr/local/greenplum-db-7.0.0/bin/initdb

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-GP_LIBRARY_PATH is = /usr/local/greenplum-db-7.0.0/lib

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-HEAP_CHECKSUM is = on

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-HBA_HOSTNAMES is = 0

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Ulimit check = Passed

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Array host connect type = Single hostname per node

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator IP address [1] = ::1

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator IP address [2] = 10.220.46.50

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Coordinator IP address [3] = fe80::250:56ff:feb3:718

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Standby Coordinator = Not Configured

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Number of primary segments = 1

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total Database segments = 2

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Trusted shell = ssh

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Number segment hosts = 2

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Mirror port base = 7000

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Number of mirror segments = 1

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Mirroring config = ON

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Mirroring type = Group

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:----------------------------------------

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Greenplum Primary Segment Configuration

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:----------------------------------------

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-gp-segment1 6000 gp-segment1 /data/primary/gpseg0 2

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-gp-segment2 6000 gp-segment2 /data/primary/gpseg1 3

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:---------------------------------------

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Greenplum Mirror Segment Configuration

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:---------------------------------------

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-gp-segment2 7000 gp-segment2 /data/mirror/gpseg0 4

20231002:14:33:52:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-gp-segment1 7000 gp-segment1 /data/mirror/gpseg1 5

Continue with Greenplum creation Yy|Nn (default=N):

>

```

`y`を入力すると、次のようなログが出力されます。

```

20231002:14:33:54:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Building the Coordinator instance database, please wait...

20231002:14:33:57:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Starting the Coordinator in admin mode

20231002:14:33:57:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Commencing parallel build of primary segment instances

20231002:14:33:57:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Spawning parallel processes batch [1], please wait...

..

20231002:14:33:58:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Waiting for parallel processes batch [1], please wait...

............

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:------------------------------------------------

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Parallel process exit status

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:------------------------------------------------

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total processes marked as completed = 2

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total processes marked as killed = 0

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total processes marked as failed = 0

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:------------------------------------------------

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Removing back out file

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-No errors generated from parallel processes

20231002:14:34:10:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Restarting the Greenplum instance in production mode

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Starting gpstop with args: -a -l /home/gpadmin/gpAdminLogs -m -d /data/coordinator/gpseg-1

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Gathering information and validating the environment...

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Obtaining Greenplum Coordinator catalog information

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Obtaining Segment details from coordinator...

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Greenplum Version: 'postgres (Greenplum Database) 7.0.0 build commit:0a7a3566873325aca1789ae6f818c80f17a9402d'

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Commencing Coordinator instance shutdown with mode='smart'

20231002:14:34:10:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Coordinator segment instance directory=/data/coordinator/gpseg-1

20231002:14:34:11:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Stopping coordinator segment and waiting for user connections to finish ...

server shutting down

20231002:14:34:12:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Attempting forceful termination of any leftover coordinator process

20231002:14:34:12:005447 gpstop:gp-coordinator:gpadmin-[INFO]:-Terminating processes for segment /data/coordinator/gpseg-1

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Starting gpstart with args: -a -l /home/gpadmin/gpAdminLogs -d /data/coordinator/gpseg-1

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Gathering information and validating the environment...

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Greenplum Binary Version: 'postgres (Greenplum Database) 7.0.0 build commit:0a7a3566873325aca1789ae6f818c80f17a9402d'

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Greenplum Catalog Version: '302307241'

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Starting Coordinator instance in admin mode

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-CoordinatorStart pg_ctl cmd is env GPSESSID=0000000000 GPERA=None $GPHOME/bin/pg_ctl -D /data/coordinator/gpseg-1 -l /data/coordinator/gpseg-1/log/startup.log -w -t 600 -o " -c gp_role=utility " start

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Obtaining Greenplum Coordinator catalog information

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Obtaining Segment details from coordinator...

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Setting new coordinator era

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Coordinator Started...

20231002:14:34:15:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Shutting down coordinator

20231002:14:34:18:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Commencing parallel segment instance startup, please wait...

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Process results...

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-----------------------------------------------------

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:- Successful segment starts = 2

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:- Failed segment starts = 0

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:- Skipped segment starts (segments are marked down in configuration) = 0

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-----------------------------------------------------

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Successfully started 2 of 2 segment instances

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-----------------------------------------------------

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Starting Coordinator instance gp-coordinator directory /data/coordinator/gpseg-1

20231002:14:34:19:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-CoordinatorStart pg_ctl cmd is env GPSESSID=0000000000 GPERA=ab03d3b04e6ea60f_231002143415 $GPHOME/bin/pg_ctl -D /data/coordinator/gpseg-1 -l /data/coordinator/gpseg-1/log/startup.log -w -t 600 -o " -c gp_role=dispatch " start

20231002:14:34:20:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Command pg_ctl reports Coordinator gp-coordinator instance active

20231002:14:34:20:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Connecting to db template1 on host localhost

20231002:14:34:20:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-No standby coordinator configured. skipping...

20231002:14:34:20:005858 gpstart:gp-coordinator:gpadmin-[INFO]:-Database successfully started

20231002:14:34:20:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Completed restart of Greenplum instance in production mode

20231002:14:34:20:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Creating core GPDB extensions

20231002:14:34:21:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Importing system collations

20231002:14:34:28:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Commencing parallel build of mirror segment instances

20231002:14:34:28:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Spawning parallel processes batch [1], please wait...

..

20231002:14:34:28:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Waiting for parallel processes batch [1], please wait...

......

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:------------------------------------------------

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Parallel process exit status

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:------------------------------------------------

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total processes marked as completed = 2

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total processes marked as killed = 0

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Total processes marked as failed = 0

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:------------------------------------------------

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Scanning utility log file for any warning messages

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Log file scan check passed

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Greenplum Database instance successfully created

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-------------------------------------------------------

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-To complete the environment configuration, please

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-update gpadmin .bashrc file with the following

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-1. Ensure that the greenplum_path.sh file is sourced

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-2. Add "export COORDINATOR_DATA_DIRECTORY=/data/coordinator/gpseg-1"

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:- to access the Greenplum scripts for this instance:

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:- or, use -d /data/coordinator/gpseg-1 option for the Greenplum scripts

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:- Example gpstate -d /data/coordinator/gpseg-1

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Script log file = /home/gpadmin/gpAdminLogs/gpinitsystem_20231002.log

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-To remove instance, run gpdeletesystem utility

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-To initialize a Standby Coordinator Segment for this Greenplum instance

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Review options for gpinitstandby

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-------------------------------------------------------

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-The Coordinator /data/coordinator/gpseg-1/pg_hba.conf post gpinitsystem

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-has been configured to allow all hosts within this new

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-array to intercommunicate. Any hosts external to this

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-new array must be explicitly added to this file

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-Refer to the Greenplum Admin support guide which is

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-located in the /usr/local/greenplum-db-7.0.0/docs directory

20231002:14:34:34:002300 gpinitsystem:gp-coordinator:gpadmin-[INFO]:-------------------------------------------------------

```

`Greenplum Database instance successfully created` と出力されたのでセットアップが成功したように見えます。

`.barshrc`に次の環境変数を追加します。

```

cat < /dev/null

export COORDINATOR_DATA_DIRECTORY=/data/coordinator/gpseg-1

export PGPORT=5432

export PGUSER=gpadmin

export PGDATABASE=gpadmin

export LD_PRELOAD=/lib64/libz.so.1 ps

EOF

source /home/gpadmin/.bashrc

```

### データベースの作成

データベースの作成はPostgreSQLと基本的に同じです。以下の作業は `gp-coordinator` 上でのみで行います。引き続き `gpadmin` ユーザーで作業します。

```bash

createdb test

```

### VMware Greenplum®へのアクセス

VMware Greenplum®へのアクセスもPostgreSQLと基本的に同じです。以下の作業は `gp-coordinator` 上でのみで行います。引き続き `gpadmin` ユーザーで作業します。

```bash

$ psql -d test

psql (12.12)

Type "help" for help.

```

次のSQLを実行します。

```sql

CREATE TABLE IF NOT EXISTS organization

(

organization_id BIGINT PRIMARY KEY,

organization_name VARCHAR(255) NOT NULL

);

INSERT INTO organization(organization_id, organization_name) VALUES(1, 'foo');

INSERT INTO organization(organization_id, organization_name) VALUES(2, 'bar');

```

デフォルではPrimary Keyでデータが分散されるようです。`gp_segment_id`というカラムでデータがどのセグメントに配置されているか確認することができます。

```sql

test=# select organization_id,organization_name,gp_segment_id from organization;

organization_id | organization_name | gp_segment_id

-----------------+-------------------+---------------

2 | bar | 0

1 | foo | 1

(2 rows)

```

とりあえず、ここまで確認できました。

### Extensionの有効化

https://docs.vmware.com/en/VMware-Greenplum/7/greenplum-database/install_guide-install_modules.html

いくつかのExtensionを有効化します。

#### uuid-ossp

https://docs.vmware.com/en/VMware-Greenplum/7/greenplum-database/ref_guide-modules-uuid-ossp.html

```

test=# CREATE EXTENSION "uuid-ossp";

CREATE EXTENSION

test=# select uuid_generate_v4();

uuid_generate_v4

--------------------------------------

230d1dd3-aca8-4140-b2fe-83b32cacf954

(1 row)

```

#### pgvector

https://docs.vmware.com/en/VMware-Greenplum/7/greenplum-database/ref_guide-modules-pgvector-pgvector.html

```sql

test=# CREATE EXTENSION vector;

CREATE EXTENSION

```

```sql

CREATE TABLE items (id bigserial PRIMARY KEY, embedding vector(3));

INSERT INTO items (embedding) VALUES ('[1,2,3]'), ('[4,5,6]');

SELECT * FROM items ORDER BY embedding <-> '[3,1,2]' LIMIT 5;

```

```

id | embedding

----+-----------

1 | [1,2,3]

2 | [4,5,6]

(2 rows)

```

#### postgresml

https://docs.vmware.com/en/VMware-Greenplum/7/greenplum-database/ref_guide-modules-postgresml.html

gppkgファイルは`pivnet`コマンドでrpmとともにダウンロード済みです。

```

sudo cp /root/Downloads/DataSciencePython3.9-2.0.0-gp7-el8_x86_64.gppkg ./

gppkg install -a DataSciencePython3.9-2.0.0-gp7-el8_x86_64.gppkg

```

```

createdb gpadmin

gpconfig -c shared_preload_libraries -v 'pgml'

```

Greenplumを再起動します。

```

gpstop -r -a

```

```

gpconfig -c pgml.venv -v '$GPHOME/ext/DataSciencePython3.9'

```

> 次のエラーが出る場合は、`gpstop -r`が漏れています。

>

> ```

> gpconfig:gp-coordinator:gpadmin-[CRITICAL]:-not a valid GUC: pgml.venv

> not a valid GUC: pgml.venv

> ```

設定をリロードします。

```

gpstop -u

```

```sql

test=# CREATE EXTENSION pgml;

INFO: Python version: 3.9.16 (main, Jul 3 2023, 20:07:32)

[GCC 8.5.0 20210514 (Red Hat 8.5.0-18)]

INFO: Scikit-learn 1.1.2, XGBoost 1.6.2, LightGBM 4.0.0, NumPy 1.22.1

CREATE EXTENSION

```

> 次のエラーが出る場合は、`gpstop -u`が漏れています。

>

> ```

> test=# CREATE EXTENSION IF NOT EXISTS pgml;

> INFO: Python version: 3.9.16 (main, Jul 3 2023, 20:07:32)

> [GCC 8.5.0 20210514 (Red Hat 8.5.0-18)]

> ERROR: The xgboost package is missing. Install it with `sudo pip3 install xgboost`

> ModuleNotFoundError: No module named 'xgboost' (api.rs:36)

> ```

PostgresMLのtransformを試します。

```sql

SELECT pgml.transform(

'question-answering',

inputs => ARRAY[

'{

"question": "What does the customer want?",

"context": "Dear Amazon, last week I ordered an Optimus Prime action figure from your online store in Germany. Unfortunately, when I opened the package, I discovered to my horror that I had been sent an action figure of Megatron instead! As a lifelong enemy of the Decepticons, I hope you can understand my dilemma. To resolve the issue, I demand an exchange of Megatron for the Optimus Prime figure I ordered. Enclosed are copies of my records concerning this purchase. I expect to hear from you soon. Sincerely, Bumblebee."}'

]

) AS answer;

```

初回はモデルのロードに時間がかかりますが、2回目以降は速いです。

```

answer

----------------------------------------------------------------------------------------------

{"end": 358, "score": 0.6312912106513977, "start": 335, "answer": "an exchange of Megatron"}

(1 row)

```

### ユーザー追加

以下のSQLを実行して、`postgresml`ユーザーと`postgresml`データベースを作ります。手順はPostgreSQLと同じです。

```

psql -c "CREATE ROLE postgresml PASSWORD 'postgresml' SUPERUSER LOGIN"

createdb postgresml --owner postgresml

psql -c 'ALTER ROLE postgresml SET search_path TO public,pgml'

```

外からアクセスできるように`gp-coordinator`上で、次の設定ファイルを変更し、Greenplumを再起動します。

```

cat < /dev/null

host postgresml postgresml 0.0.0.0/0 md5

EOF

gpstop -r -a

```

`gp-coordinator`の外から`gp-coordinator`のIPにアクセスします。

```

$ PGPASSWORD=postgresml psql -U postgresml -d postgresml -h 10.220.46.50

psql (15.3, server 12.12)

Type "help" for help.

postgresml=#

```

以下のSQLを実行して、結果が変えればOKです。

```sql

CREATE EXTENSION IF NOT EXISTS pgml;

SELECT pgml.transform(

'question-answering',

inputs => ARRAY[

'{

"question": "What does the customer want?",

"context": "Dear Amazon, last week I ordered an Optimus Prime action figure from your online store in Germany. Unfortunately, when I opened the package, I discovered to my horror that I had been sent an action figure of Megatron instead! As a lifelong enemy of the Decepticons, I hope you can understand my dilemma. To resolve the issue, I demand an exchange of Megatron for the Optimus Prime figure I ordered. Enclosed are copies of my records concerning this purchase. I expect to hear from you soon. Sincerely, Bumblebee."}'

]

) AS answer;

```

---

並列分散処理が得意なGreenplumでvgvectorやPostgresMLが利用できることで、[Retrieval Augmented Generation (RAG)](https://ai.meta.com/blog/retrieval-augmented-generation-streamlining-the-creation-of-intelligent-natural-language-processing-models/)パターンに大きく活用できそうです。