Table of contents

- Installing the CLI

- Uploading the OVA files to vCenter

- Creating an SSH key

- Creating the Management Cluster on vSphere

- Creating a Workload Cluster

- Installing MetalLB

- Checking Persistent Volumes

- Scaling out the Workload Cluster

- Deleting the Workload Cluster

- Deleting the Management Cluster

Installing the CLI

Download

- VMware Tanzu Kubernetes Grid CLI for Mac

and install it as follows.

tar xzvf tkg-darwin-amd64-v1.2.0-vmware.1.tar.gz

mv tkg/tkg-darwin-amd64-v1.2.0+vmware.1 /usr/local/bin/tkg

chmod +x /usr/local/bin/tkg

Docker is also required.

The steps in this post were verified with the following version.

$ tkg version

Client:

Version: v1.2.0

Git commit: 05b233e75d6e40659247a67750b3e998c2d990a5
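As a quick pre-flight check, the following sketch reports which of the commands used in this walkthrough are missing from PATH. The function name missing_commands is made up for illustration and is not part of TKG.

```shell
# Print each command from the argument list that is not found on PATH.
# Prints nothing and succeeds when every command is available.
# The body runs in a subshell so its variables do not leak into the caller.
missing_commands() (
  rc=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      printf '%s\n' "$cmd"
      rc=1
    fi
  done
  exit "$rc"
)
```

For example, `missing_commands tkg docker govc kubectl` prints the tools that still need to be installed, one per line.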

Running the following command generates the directory for the configuration files.

tkg get management-cluster

A directory like the following is created.

$ find ~/.tkg

/Users/toshiaki/.tkg

/Users/toshiaki/.tkg/bom

/Users/toshiaki/.tkg/bom/bom-1.1.2+vmware.1.yaml

/Users/toshiaki/.tkg/bom/bom-1.18.8+vmware.1.yaml

/Users/toshiaki/.tkg/bom/bom-tkg-1.0.0.yaml

/Users/toshiaki/.tkg/bom/bom-1.2.0+vmware.1.yaml

/Users/toshiaki/.tkg/bom/bom-1.17.6+vmware.1.yaml

/Users/toshiaki/.tkg/bom/bom-1.17.11+vmware.1.yaml

/Users/toshiaki/.tkg/bom/bom-1.1.0+vmware.1.yaml

/Users/toshiaki/.tkg/bom/bom-1.17.9+vmware.1.yaml

/Users/toshiaki/.tkg/bom/bom-1.1.3+vmware.1.yaml

/Users/toshiaki/.tkg/providers

/Users/toshiaki/.tkg/providers/infrastructure-azure

/Users/toshiaki/.tkg/providers/infrastructure-azure/v0.4.8

/Users/toshiaki/.tkg/providers/infrastructure-azure/v0.4.8/infrastructure-components.yaml

/Users/toshiaki/.tkg/providers/infrastructure-azure/v0.4.8/cluster-template-definition-prod.yaml

/Users/toshiaki/.tkg/providers/infrastructure-azure/v0.4.8/cluster-template-definition-dev.yaml

/Users/toshiaki/.tkg/providers/infrastructure-azure/v0.4.8/ytt

/Users/toshiaki/.tkg/providers/infrastructure-azure/v0.4.8/ytt/overlay.yaml

/Users/toshiaki/.tkg/providers/infrastructure-azure/v0.4.8/ytt/base-template.yaml

/Users/toshiaki/.tkg/providers/infrastructure-azure/ytt

/Users/toshiaki/.tkg/providers/infrastructure-azure/ytt/azure-overlay.yaml

/Users/toshiaki/.tkg/providers/control-plane-kubeadm

/Users/toshiaki/.tkg/providers/control-plane-kubeadm/v0.3.10

/Users/toshiaki/.tkg/providers/control-plane-kubeadm/v0.3.10/control-plane-components.yaml

/Users/toshiaki/.tkg/providers/providers.md5sum

/Users/toshiaki/.tkg/providers/infrastructure-vsphere

/Users/toshiaki/.tkg/providers/infrastructure-vsphere/v0.7.1

/Users/toshiaki/.tkg/providers/infrastructure-vsphere/v0.7.1/infrastructure-components.yaml

/Users/toshiaki/.tkg/providers/infrastructure-vsphere/v0.7.1/cluster-template-definition-prod.yaml

/Users/toshiaki/.tkg/providers/infrastructure-vsphere/v0.7.1/cluster-template-definition-dev.yaml

/Users/toshiaki/.tkg/providers/infrastructure-vsphere/v0.7.1/ytt

/Users/toshiaki/.tkg/providers/infrastructure-vsphere/v0.7.1/ytt/overlay.yaml

/Users/toshiaki/.tkg/providers/infrastructure-vsphere/v0.7.1/ytt/add_csi.yaml

/Users/toshiaki/.tkg/providers/infrastructure-vsphere/v0.7.1/ytt/csi-vsphere.lib.txt

/Users/toshiaki/.tkg/providers/infrastructure-vsphere/v0.7.1/ytt/csi.lib.yaml

/Users/toshiaki/.tkg/providers/infrastructure-vsphere/v0.7.1/ytt/base-template.yaml

/Users/toshiaki/.tkg/providers/infrastructure-vsphere/ytt

/Users/toshiaki/.tkg/providers/infrastructure-vsphere/ytt/vsphere-overlay.yaml

/Users/toshiaki/.tkg/providers/cluster-api

/Users/toshiaki/.tkg/providers/cluster-api/v0.3.10

/Users/toshiaki/.tkg/providers/cluster-api/v0.3.10/core-components.yaml

/Users/toshiaki/.tkg/providers/config.yaml

/Users/toshiaki/.tkg/providers/bootstrap-kubeadm

/Users/toshiaki/.tkg/providers/bootstrap-kubeadm/v0.3.10

/Users/toshiaki/.tkg/providers/bootstrap-kubeadm/v0.3.10/bootstrap-components.yaml

/Users/toshiaki/.tkg/providers/infrastructure-aws

/Users/toshiaki/.tkg/providers/infrastructure-aws/v0.5.5

/Users/toshiaki/.tkg/providers/infrastructure-aws/v0.5.5/infrastructure-components.yaml

/Users/toshiaki/.tkg/providers/infrastructure-aws/v0.5.5/cluster-template-definition-prod.yaml

/Users/toshiaki/.tkg/providers/infrastructure-aws/v0.5.5/cluster-template-definition-dev.yaml

/Users/toshiaki/.tkg/providers/infrastructure-aws/v0.5.5/ytt

/Users/toshiaki/.tkg/providers/infrastructure-aws/v0.5.5/ytt/overlay.yaml

/Users/toshiaki/.tkg/providers/infrastructure-aws/v0.5.5/ytt/base-template.yaml

/Users/toshiaki/.tkg/providers/infrastructure-aws/ytt

/Users/toshiaki/.tkg/providers/infrastructure-aws/ytt/aws-overlay.yaml

/Users/toshiaki/.tkg/providers/config_default.yaml

/Users/toshiaki/.tkg/providers/infrastructure-docker

/Users/toshiaki/.tkg/providers/infrastructure-docker/v0.3.10

/Users/toshiaki/.tkg/providers/infrastructure-docker/v0.3.10/infrastructure-components.yaml

/Users/toshiaki/.tkg/providers/infrastructure-docker/v0.3.10/metadata.yaml

/Users/toshiaki/.tkg/providers/infrastructure-docker/v0.3.10/cluster-template-definition-prod.yaml

/Users/toshiaki/.tkg/providers/infrastructure-docker/v0.3.10/cluster-template-definition-dev.yaml

/Users/toshiaki/.tkg/providers/infrastructure-docker/v0.3.10/ytt

/Users/toshiaki/.tkg/providers/infrastructure-docker/v0.3.10/ytt/overlay.yaml

/Users/toshiaki/.tkg/providers/infrastructure-docker/v0.3.10/ytt/base-template.yaml

/Users/toshiaki/.tkg/providers/infrastructure-docker/ytt

/Users/toshiaki/.tkg/providers/infrastructure-docker/ytt/docker-overlay.yaml

/Users/toshiaki/.tkg/providers/infrastructure-tkg-service-vsphere

/Users/toshiaki/.tkg/providers/infrastructure-tkg-service-vsphere/v1.0.0

/Users/toshiaki/.tkg/providers/infrastructure-tkg-service-vsphere/v1.0.0/cluster-template-definition-prod.yaml

/Users/toshiaki/.tkg/providers/infrastructure-tkg-service-vsphere/v1.0.0/cluster-template-definition-dev.yaml

/Users/toshiaki/.tkg/providers/infrastructure-tkg-service-vsphere/v1.0.0/ytt

/Users/toshiaki/.tkg/providers/infrastructure-tkg-service-vsphere/v1.0.0/ytt/overlay.yaml

/Users/toshiaki/.tkg/providers/infrastructure-tkg-service-vsphere/v1.0.0/ytt/base-template.yaml

/Users/toshiaki/.tkg/providers/infrastructure-tkg-service-vsphere/ytt

/Users/toshiaki/.tkg/providers/infrastructure-tkg-service-vsphere/ytt/tkg-service-vsphere-overlay.yaml

/Users/toshiaki/.tkg/providers/ytt

/Users/toshiaki/.tkg/providers/ytt/02_addons

/Users/toshiaki/.tkg/providers/ytt/02_addons/cni

/Users/toshiaki/.tkg/providers/ytt/02_addons/cni/antrea

/Users/toshiaki/.tkg/providers/ytt/02_addons/cni/antrea/antrea.lib.yaml

/Users/toshiaki/.tkg/providers/ytt/02_addons/cni/antrea/antrea_overlay.lib.yaml

/Users/toshiaki/.tkg/providers/ytt/02_addons/cni/add_cni.yaml

/Users/toshiaki/.tkg/providers/ytt/02_addons/cni/calico

/Users/toshiaki/.tkg/providers/ytt/02_addons/cni/calico/calico_overlay.lib.yaml

/Users/toshiaki/.tkg/providers/ytt/02_addons/cni/calico/calico.lib.yaml

/Users/toshiaki/.tkg/providers/ytt/02_addons/metadata

/Users/toshiaki/.tkg/providers/ytt/02_addons/metadata/add_cluster_metadata.yaml

/Users/toshiaki/.tkg/providers/ytt/01_plans

/Users/toshiaki/.tkg/providers/ytt/01_plans/dev.yaml

/Users/toshiaki/.tkg/providers/ytt/01_plans/prod.yaml

/Users/toshiaki/.tkg/providers/ytt/01_plans/oidc.yaml

/Users/toshiaki/.tkg/providers/ytt/03_customizations

/Users/toshiaki/.tkg/providers/ytt/03_customizations/filter.yaml

/Users/toshiaki/.tkg/providers/ytt/03_customizations/remove_mhc.yaml

/Users/toshiaki/.tkg/providers/ytt/03_customizations/add_sc.yaml

/Users/toshiaki/.tkg/providers/ytt/03_customizations/registry_skip_tls_verify.yaml

/Users/toshiaki/.tkg/providers/ytt/lib

/Users/toshiaki/.tkg/providers/ytt/lib/validate.star

/Users/toshiaki/.tkg/providers/ytt/lib/helpers.star

/Users/toshiaki/.tkg/.providers.zip.lock

/Users/toshiaki/.tkg/config.yaml

/Users/toshiaki/.tkg/.boms.lock

Uploading the OVA files to vCenter

Download

- Photon v3 Kubernetes v1.19.1 OVA

- Photon v3 Kubernetes v1.18.8 OVA

and save them to ~/Downloads.

Set the information for the environment where TKG will be installed, as in the following example. The target network must have DHCP enabled.

cat <<EOF > tkg-env.sh

export GOVC_URL=vcsa.v.maki.lol

export GOVC_USERNAME=administrator@vsphere.local

export GOVC_PASSWORD=VMware1!

export GOVC_DATACENTER=Datacenter

export GOVC_NETWORK="VM Network"

export GOVC_DATASTORE=datastore1

export GOVC_RESOURCE_POOL=/Datacenter/host/Cluster/Resources/tkg

export GOVC_INSECURE=1

export TEMPLATE_FOLDER=/Datacenter/vm/tkg

EOF
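Since every later step depends on these variables, it may help to fail early when one of them is missing. The following is a minimal sketch; require_vars is a hypothetical helper, and it assumes the arguments are valid shell variable names.

```shell
# Fail, naming the first variable that is unset or empty.
# Arguments must be valid shell variable names (they are passed to eval).
require_vars() (
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      printf 'missing required variable: %s\n' "$name" >&2
      exit 1
    fi
  done
)
```

After `source tkg-env.sh`, a call such as `require_vars GOVC_URL GOVC_USERNAME GOVC_PASSWORD GOVC_DATACENTER GOVC_NETWORK GOVC_DATASTORE GOVC_RESOURCE_POOL TEMPLATE_FOLDER || exit 1` would stop a script before any govc command runs with an empty value.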

Upload the OVA files to vCenter with the following commands.

source tkg-env.sh

govc pool.create ${GOVC_RESOURCE_POOL}

govc folder.create ${TEMPLATE_FOLDER}

govc import.ova -folder ${TEMPLATE_FOLDER} ~/Downloads/photon-3-kube-v1.18.8-vmware.1.ova

govc import.ova -folder ${TEMPLATE_FOLDER} ~/Downloads/photon-3-kube-v1.19.1-vmware.2.ova

govc vm.markastemplate ${TEMPLATE_FOLDER}/photon-3-kube-v1.18.8

govc vm.markastemplate ${TEMPLATE_FOLDER}/photon-3-kube-v1.19.1
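In the commands above, the name passed to govc vm.markastemplate is the OVA file name without the ".ova" extension and the "-vmware.N" suffix. Assuming that pattern holds for other OVA files as well (it is only inferred from the two examples here), the name can be derived instead of typed by hand. template_name_from_ova is a hypothetical helper.

```shell
# Derive the template name from an OVA path, following the pattern seen above:
#   ~/Downloads/photon-3-kube-v1.18.8-vmware.1.ova -> photon-3-kube-v1.18.8
template_name_from_ova() (
  name=${1##*/}        # drop the directory part
  name=${name%.ova}    # drop the file extension
  printf '%s\n' "${name%-vmware.*}"   # drop the "-vmware.N" build suffix
)

# Example (not run here): import every downloaded OVA and mark it as a template.
# for ova in ~/Downloads/photon-3-kube-*.ova; do
#   govc import.ova -folder "${TEMPLATE_FOLDER}" "$ova"
#   govc vm.markastemplate "${TEMPLATE_FOLDER}/$(template_name_from_ova "$ova")"
# done
```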

Creating an SSH key

Create an SSH key for the VMs that TKG creates.

ssh-keygen -t rsa -b 4096 -f ~/.ssh/tkg

Leave the passphrase empty.

Creating the Management Cluster on vSphere

Create the Management Cluster, which TKG uses to create Workload Clusters.

The following commands append the vCenter information to the Management Cluster configuration file (~/.tkg/config.yaml).

source tkg-env.sh

cat <<EOF >> ~/.tkg/config.yaml

VSPHERE_SERVER: ${GOVC_URL}

VSPHERE_USERNAME: ${GOVC_USERNAME}

VSPHERE_PASSWORD: ${GOVC_PASSWORD}

VSPHERE_DATACENTER: ${GOVC_DATACENTER}

VSPHERE_DATASTORE: ${GOVC_DATACENTER}/datastore/${GOVC_DATASTORE}

VSPHERE_NETWORK: ${GOVC_NETWORK}

SERVICE_CIDR: 100.64.0.0/13

CLUSTER_CIDR: 100.96.0.0/11

VSPHERE_RESOURCE_POOL: ${GOVC_RESOURCE_POOL}

VSPHERE_FOLDER: ${TEMPLATE_FOLDER}

VSPHERE_CONTROL_PLANE_NUM_CPUS: "2"

VSPHERE_CONTROL_PLANE_MEM_MIB: "2048"

VSPHERE_CONTROL_PLANE_DISK_GIB: "20"

VSPHERE_WORKER_NUM_CPUS: "2"

VSPHERE_WORKER_MEM_MIB: "4096"

VSPHERE_WORKER_DISK_GIB: "20"

VSPHERE_SSH_AUTHORIZED_KEY: $(cat ~/.ssh/tkg.pub)

EOF
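Because the heredoc above uses `>>`, running it a second time appends the same keys again. How duplicated keys are then handled depends on the YAML parser, so one option is to append a key only when it is not present yet. The following is a sketch with a hypothetical helper append_if_absent. It assumes top-level "KEY: value" lines, as in the block above.

```shell
# Append "KEY: value" to a config file only when KEY is not already present
# as a top-level key. Existing lines are never modified.
append_if_absent() (
  file=$1 key=$2 value=$3
  if grep -q "^${key}:" "$file" 2>/dev/null; then
    exit 0   # key already set; leave the existing line untouched
  fi
  printf '%s: %s\n' "$key" "$value" >> "$file"
)
```

For example, `append_if_absent ~/.tkg/config.yaml VSPHERE_SERVER "${GOVC_URL}"` can be repeated safely.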

Create the Management Cluster with the following command. The number of Control Plane nodes is 1 for the dev plan and 3 for the prod plan.

tkg init --infrastructure vsphere --name tkg-sandbox --plan dev --vsphere-controlplane-endpoint-ip 192.168.56.100 --deploy-tkg-on-vSphere7 -v 6

tkg init performs the equivalent of creating a Kind cluster, clusterctl init, clusterctl config, and kubectl apply.

It prints logs like the following.

Logs of the command execution can also be found at: /var/folders/76/vg4pyy253pbgwzncmx2mb1gh0000gq/T/tkg-20201018T010707991110089.log

unable to find image capdControllerImage in BOM file

Using configuration file: /Users/toshiaki/.tkg/config.yaml

Validating the pre-requisites...

vSphere 7.0 Environment Detected.

You have connected to a vSphere 7.0 environment which does not have vSphere with Tanzu enabled. vSphere with Tanzu includes

an integrated Tanzu Kubernetes Grid Service which turns a vSphere cluster into a platform for running Kubernetes workloads in dedicated

resource pools. Configuring Tanzu Kubernetes Grid Service is done through vSphere HTML5 client.

Tanzu Kubernetes Grid Service is the preferred way to consume Tanzu Kubernetes Grid in vSphere 7.0 environments. Alternatively you may

deploy a non-integrated Tanzu Kubernetes Grid instance on vSphere 7.0.

Deploying TKG management cluster on vSphere 7.0 ...

Setting up management cluster...

Validating configuration...

Using infrastructure provider vsphere:v0.7.1

Generating cluster configuration...

Fetching File="cluster-template-definition-dev.yaml" Provider="infrastructure-vsphere" Version="v0.7.1"

Setting up bootstrapper...

Fetching configuration for kind node image...

Creating kind cluster: tkg-kind-bu5hdb98d3b3v2ga8go0

...

Context set for management cluster tkg-sandbox as 'tkg-sandbox-admin@tkg-sandbox'.

Deleting kind cluster: tkg-kind-bu5hdb98d3b3v2ga8go0

Management cluster created!

You can now create your first workload cluster by running the following:

tkg create cluster [name] --kubernetes-version=[version] --plan=[plan]

The VMs for the Management Cluster have now been created on vSphere.

The tkg get management-cluster command lists the Management Clusters.

$ tkg get management-cluster

MANAGEMENT-CLUSTER-NAME CONTEXT-NAME STATUS

tkg-sandbox * tkg-sandbox-admin@tkg-sandbox Success

The tkg get cluster --include-management-cluster command lists both the Workload Clusters and the Management Clusters.

$ tkg get cluster --include-management-cluster

NAME NAMESPACE STATUS CONTROLPLANE WORKERS KUBERNETES ROLES

tkg-sandbox tkg-system running 1/1 1/1 v1.19.1+vmware.2 management

Access the Management Cluster with kubectl.

$ kubectl config use-context tkg-sandbox-admin@tkg-sandbox

$ kubectl cluster-info

Kubernetes master is running at https://192.168.56.100:6443

KubeDNS is running at https://192.168.56.100:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

$ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

tkg-sandbox-control-plane-x2whc Ready master 11m v1.19.1+vmware.2 192.168.56.143 192.168.56.143 VMware Photon OS/Linux 4.19.145-2.ph3 containerd://1.3.4

tkg-sandbox-md-0-6d8f6f8665-4wqdh Ready <none> 10m v1.19.1+vmware.2 192.168.56.144 192.168.56.144 VMware Photon OS/Linux 4.19.145-2.ph3 containerd://1.3.4

$ kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

capi-kubeadm-bootstrap-system capi-kubeadm-bootstrap-controller-manager-555959949-cc9gt 2/2 Running 0 8m57s 100.96.1.8 tkg-sandbox-md-0-6d8f6f8665-4wqdh <none> <none>

capi-kubeadm-control-plane-system capi-kubeadm-control-plane-controller-manager-55fccd567d-hpsjc 2/2 Running 0 8m55s 100.96.1.10 tkg-sandbox-md-0-6d8f6f8665-4wqdh <none> <none>

capi-system capi-controller-manager-dcccc544f-pvlmw 2/2 Running 0 9m 100.96.1.7 tkg-sandbox-md-0-6d8f6f8665-4wqdh <none> <none>

capi-webhook-system capi-controller-manager-84fd8774b6-vq4pz 2/2 Running 0 9m1s 100.96.1.6 tkg-sandbox-md-0-6d8f6f8665-4wqdh <none> <none>

capi-webhook-system capi-kubeadm-bootstrap-controller-manager-5c4886bf78-92mbm 2/2 Running 0 8m58s 100.96.1.9 tkg-sandbox-md-0-6d8f6f8665-4wqdh <none> <none>

capi-webhook-system capi-kubeadm-control-plane-controller-manager-85578c464c-m2wzn 2/2 Running 0 8m56s 100.96.0.6 tkg-sandbox-control-plane-x2whc <none> <none>

capi-webhook-system capv-controller-manager-66bd87d667-kvj24 2/2 Running 0 8m52s 100.96.0.7 tkg-sandbox-control-plane-x2whc <none> <none>

capv-system capv-controller-manager-6cd6b9c454-qvljl 2/2 Running 0 8m50s 100.96.1.11 tkg-sandbox-md-0-6d8f6f8665-4wqdh <none> <none>

cert-manager cert-manager-556f68587d-bhbfb 1/1 Running 0 10m 100.96.1.4 tkg-sandbox-md-0-6d8f6f8665-4wqdh <none> <none>

cert-manager cert-manager-cainjector-5bc85c4c69-2dffq 1/1 Running 0 10m 100.96.1.5 tkg-sandbox-md-0-6d8f6f8665-4wqdh <none> <none>

cert-manager cert-manager-webhook-7bd69dfcf8-962tt 1/1 Running 0 10m 100.96.1.3 tkg-sandbox-md-0-6d8f6f8665-4wqdh <none> <none>

kube-system antrea-agent-gbbsg 2/2 Running 0 10m 192.168.56.144 tkg-sandbox-md-0-6d8f6f8665-4wqdh <none> <none>

kube-system antrea-agent-rgtpm 2/2 Running 0 11m 192.168.56.143 tkg-sandbox-control-plane-x2whc <none> <none>

kube-system antrea-controller-5d594c5cc7-lhkbs 1/1 Running 0 11m 192.168.56.143 tkg-sandbox-control-plane-x2whc <none> <none>

kube-system coredns-5bcf65484d-k828j 1/1 Running 0 11m 100.96.0.5 tkg-sandbox-control-plane-x2whc <none> <none>

kube-system coredns-5bcf65484d-qj9gd 1/1 Running 0 11m 100.96.0.2 tkg-sandbox-control-plane-x2whc <none> <none>

kube-system etcd-tkg-sandbox-control-plane-x2whc 1/1 Running 0 11m 192.168.56.143 tkg-sandbox-control-plane-x2whc <none> <none>

kube-system kube-apiserver-tkg-sandbox-control-plane-x2whc 1/1 Running 0 11m 192.168.56.143 tkg-sandbox-control-plane-x2whc <none> <none>

kube-system kube-controller-manager-tkg-sandbox-control-plane-x2whc 1/1 Running 0 11m 192.168.56.143 tkg-sandbox-control-plane-x2whc <none> <none>

kube-system kube-proxy-7bw8w 1/1 Running 0 11m 192.168.56.143 tkg-sandbox-control-plane-x2whc <none> <none>

kube-system kube-proxy-dvqkm 1/1 Running 0 10m 192.168.56.144 tkg-sandbox-md-0-6d8f6f8665-4wqdh <none> <none>

kube-system kube-scheduler-tkg-sandbox-control-plane-x2whc 1/1 Running 0 11m 192.168.56.143 tkg-sandbox-control-plane-x2whc <none> <none>

kube-system kube-vip-tkg-sandbox-control-plane-x2whc 1/1 Running 0 11m 192.168.56.143 tkg-sandbox-control-plane-x2whc <none> <none>

kube-system vsphere-cloud-controller-manager-b5s7t 1/1 Running 0 11m 192.168.56.143 tkg-sandbox-control-plane-x2whc <none> <none>

kube-system vsphere-csi-controller-555595b64c-jjqr4 5/5 Running 0 11m 100.96.0.3 tkg-sandbox-control-plane-x2whc <none> <none>

kube-system vsphere-csi-node-szcsh 3/3 Running 0 10m 100.96.1.2 tkg-sandbox-md-0-6d8f6f8665-4wqdh <none> <none>

kube-system vsphere-csi-node-zcbdg 3/3 Running 0 11m 100.96.0.4 tkg-sandbox-control-plane-x2whc <none> <none>

Unlike TKG 1.1 and earlier, Antrea is now used as the CNI.

Let's also look at the Cluster API resources.

$ kubectl get cluster -A

NAMESPACE NAME PHASE

tkg-system tkg-sandbox Provisioned

$ kubectl get machinedeployment -A

NAMESPACE NAME PHASE REPLICAS AVAILABLE READY

tkg-system tkg-sandbox-md-0 Running 1 1 1

$ kubectl get machineset -A

NAMESPACE NAME REPLICAS AVAILABLE READY

tkg-system tkg-sandbox-md-0-6d8f6f8665 1 1 1

$ kubectl get machine -A

NAMESPACE NAME PROVIDERID PHASE VERSION

tkg-system tkg-sandbox-control-plane-x2whc vsphere://42230530-80a3-ae40-3f99-0949a8fd40cc Provisioned v1.19.1+vmware.2

tkg-system tkg-sandbox-md-0-6d8f6f8665-4wqdh vsphere://42235285-831b-12a5-c196-f67c6daaa72e Provisioned v1.19.1+vmware.2

$ kubectl get machinehealthcheck -A

NAMESPACE NAME MAXUNHEALTHY EXPECTEDMACHINES CURRENTHEALTHY

tkg-system tkg-sandbox 100% 1 1

$ kubectl get kubeadmcontrolplane -A

NAMESPACE NAME INITIALIZED API SERVER AVAILABLE VERSION REPLICAS READY UPDATED UNAVAILABLE

tkg-system tkg-sandbox-control-plane true true v1.19.1+vmware.2 1 1 1

$ kubectl get kubeadmconfigtemplate -A

NAMESPACE NAME AGE

tkg-system tkg-sandbox-md-0 9m50s

Let's also look at the vSphere-specific resources.

$ kubectl get vspherecluster -A

NAMESPACE NAME AGE

tkg-system tkg-sandbox 10m

$ kubectl get vspheremachine -A

NAMESPACE NAME AGE

tkg-system tkg-sandbox-control-plane-8t8n6 10m

tkg-system tkg-sandbox-worker-rpjxl 10m

$ kubectl get vspheremachinetemplate -A

NAMESPACE NAME AGE

tkg-system tkg-sandbox-control-plane 10m

tkg-system tkg-sandbox-worker 10m

Creating a Workload Cluster

Select the target Management Cluster with tkg set management-cluster. This is unnecessary if there is only one.

$ tkg set management-cluster tkg-sandbox

The current management cluster context is switched to tkg-sandbox

You can check which Management Cluster is currently targeted with tkg get management-cluster. The cluster marked with * is the target.

$ tkg get management-cluster

MANAGEMENT-CLUSTER-NAME CONTEXT-NAME STATUS

tkg-sandbox * tkg-sandbox-admin@tkg-sandbox Success

Create a Workload Cluster with tkg create cluster.

tkg create cluster demo --plan dev --vsphere-controlplane-endpoint-ip 192.168.56.101 --kubernetes-version=v1.18.8+vmware.1 -v 6

The supported Kubernetes versions can be listed with the following command. In practice, I believe the matching OVA also has to be uploaded.

$ tkg get kubernetesversions

VERSIONS

v1.17.11+vmware.1

v1.17.3+vmware.2

v1.17.6+vmware.1

v1.17.9+vmware.1

v1.18.2+vmware.1

v1.18.3+vmware.1

v1.18.6+vmware.1

v1.18.8+vmware.1

v1.19.1+vmware.2

tkg create cluster prints logs like the following.

Logs of the command execution can also be found at: /var/folders/76/vg4pyy253pbgwzncmx2mb1gh0000gq/T/tkg-20201018T012958602544516.log

unable to find image capdControllerImage in BOM file

Using configuration file: /Users/toshiaki/.tkg/config.yaml

Checking cluster reachability...

Validating configuration...

Checking cluster reachability...

Fetching File="cluster-template-definition-dev.yaml" Provider="infrastructure-vsphere" Version="v0.7.1"

Creating workload cluster 'demo'...

patch cluster object with operation status:

{

"metadata": {

"annotations": {

"TKGOperationInfo" : "{\"Operation\":\"Create\",\"OperationStartTimestamp\":\"2020-10-17 16:29:59.845031 +0000 UTC\",\"OperationTimeout\":1800}",

"TKGOperationLastObservedTimestamp" : "2020-10-17 16:29:59.845031 +0000 UTC"

}

}

}

Waiting for cluster to be initialized...

cluster control plane is still being initialized, retrying

cluster control plane is still being initialized, retrying

cluster control plane is still being initialized, retrying

Getting secret for cluster

Waiting for resource demo-kubeconfig of type *v1.Secret to be up and running

Waiting for cluster nodes to be available...

Waiting for resource demo of type *v1alpha3.Cluster to be up and running

cluster kubeadm control plane is still getting ready, retrying

Waiting for resources type *v1alpha3.KubeadmControlPlaneList to be up and running

Waiting for resources type *v1alpha3.MachineDeploymentList to be up and running

worker nodes are still being created for MachineDeployment 'demo-md-0', DesiredReplicas=1 Replicas=1 ReadyReplicas=0 UpdatedReplicas=1, retrying

worker nodes are still being created for MachineDeployment 'demo-md-0', DesiredReplicas=1 Replicas=1 ReadyReplicas=0 UpdatedReplicas=1, retrying

worker nodes are still being created for MachineDeployment 'demo-md-0', DesiredReplicas=1 Replicas=1 ReadyReplicas=0 UpdatedReplicas=1, retrying

worker nodes are still being created for MachineDeployment 'demo-md-0', DesiredReplicas=1 Replicas=1 ReadyReplicas=0 UpdatedReplicas=1, retrying

worker nodes are still being created for MachineDeployment 'demo-md-0', DesiredReplicas=1 Replicas=1 ReadyReplicas=0 UpdatedReplicas=1, retrying

Waiting for resources type *v1alpha3.MachineList to be up and running

Checking cluster reachability...

Waiting for addons installation...

Waiting for resources type *v1alpha3.ClusterResourceSetList to be up and running

Waiting for resource antrea-controller of type *v1.Deployment to be up and running

Workload cluster 'demo' created

The VMs for the Workload Cluster have now been created on vSphere.

You can check the Workload Cluster with tkg get cluster.

$ tkg get cluster

NAME NAMESPACE STATUS CONTROLPLANE WORKERS KUBERNETES ROLES

demo default running 1/1 1/1 v1.18.8+vmware.1 <none>

Check the Cluster API resources.

$ kubectl get cluster -A

NAMESPACE NAME PHASE

default demo Provisioned

tkg-system tkg-sandbox Provisioned

$ kubectl get machinedeployment -A

NAMESPACE NAME PHASE REPLICAS AVAILABLE READY

default demo-md-0 Running 1 1 1

tkg-system tkg-sandbox-md-0 Running 1 1 1

$ kubectl get machineset -A

NAMESPACE NAME REPLICAS AVAILABLE READY

default demo-md-0-5d8dc5c79 1 1 1

tkg-system tkg-sandbox-md-0-6d8f6f8665 1 1 1

$ kubectl get machine -A

NAMESPACE NAME PROVIDERID PHASE VERSION

default demo-control-plane-qs74b vsphere://42231c86-ffdd-a6de-4984-c7ee299a70e3 Running v1.18.8+vmware.1

default demo-md-0-5d8dc5c79-mdbdc vsphere://4223f151-111a-25e5-c2b8-0422c963ae10 Running v1.18.8+vmware.1

tkg-system tkg-sandbox-control-plane-x2whc vsphere://42230530-80a3-ae40-3f99-0949a8fd40cc Running v1.19.1+vmware.2

tkg-system tkg-sandbox-md-0-6d8f6f8665-4wqdh vsphere://42235285-831b-12a5-c196-f67c6daaa72e Running v1.19.1+vmware.2

$ kubectl get machinehealthcheck -A

NAMESPACE NAME MAXUNHEALTHY EXPECTEDMACHINES CURRENTHEALTHY

default demo 100% 1 1

tkg-system tkg-sandbox 100% 1 1

$ kubectl get kubeadmcontrolplane -A

NAMESPACE NAME READY INITIALIZED REPLICAS READY REPLICAS UPDATED REPLICAS UNAVAILABLE REPLICAS

default demo-control-plane true true 1 1 1

tkg-system tkg-sandbox-control-plane true true 1 1 1

$ kubectl get kubeadmconfigtemplate -A

NAMESPACE NAME AGE

default demo-md-0 7m40s

tkg-system tkg-sandbox-md-0 19m

$ kubectl get vspherecluster -A

NAMESPACE NAME AGE

default demo 8m5s

tkg-system tkg-sandbox 19m

$ kubectl get vspheremachine -A

NAMESPACE NAME AGE

default demo-control-plane 9m53s

default demo-worker 9m53s

tkg-system tkg-sandbox-control-plane 24m

tkg-system tkg-sandbox-worker 24m

$ kubectl get vspheremachinetemplate -A

NAMESPACE NAME AGE

default demo-control-plane 9m53s

default demo-worker 9m53s

tkg-system tkg-sandbox-control-plane 24m

tkg-system tkg-sandbox-worker 24m

Get the config for the Workload Cluster demo and set it as the current context.

tkg get credentials demo

kubectl config use-context demo-admin@demo

Check the Workload Cluster demo.

$ kubectl cluster-info

Kubernetes master is running at https://192.168.56.101:6443

KubeDNS is running at https://192.168.56.101:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

$ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

demo-control-plane-qs74b Ready master 10m v1.18.8+vmware.1 192.168.56.145 192.168.56.145 VMware Photon OS/Linux 4.19.145-2.ph3 containerd://1.3.4

demo-md-0-5d8dc5c79-mdbdc Ready <none> 8m51s v1.18.8+vmware.1 192.168.56.146 192.168.56.146 VMware Photon OS/Linux 4.19.145-2.ph3 containerd://1.3.4

$ kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system antrea-agent-vp99b 2/2 Running 0 9m10s 192.168.56.146 demo-md-0-5d8dc5c79-mdbdc <none> <none>

kube-system antrea-agent-wfnc7 2/2 Running 0 10m 192.168.56.145 demo-control-plane-qs74b <none> <none>

kube-system antrea-controller-5c5fb7579b-pns97 1/1 Running 0 10m 192.168.56.145 demo-control-plane-qs74b <none> <none>

kube-system coredns-559b5b58d8-cqd5t 1/1 Running 0 10m 100.96.0.2 demo-control-plane-qs74b <none> <none>

kube-system coredns-559b5b58d8-hll95 1/1 Running 0 10m 100.96.0.5 demo-control-plane-qs74b <none> <none>

kube-system etcd-demo-control-plane-qs74b 1/1 Running 0 10m 192.168.56.145 demo-control-plane-qs74b <none> <none>

kube-system kube-apiserver-demo-control-plane-qs74b 1/1 Running 0 10m 192.168.56.145 demo-control-plane-qs74b <none> <none>

kube-system kube-controller-manager-demo-control-plane-qs74b 1/1 Running 0 10m 192.168.56.145 demo-control-plane-qs74b <none> <none>

kube-system kube-proxy-8pjf7 1/1 Running 0 9m10s 192.168.56.146 demo-md-0-5d8dc5c79-mdbdc <none> <none>

kube-system kube-proxy-fmbwp 1/1 Running 0 10m 192.168.56.145 demo-control-plane-qs74b <none> <none>

kube-system kube-scheduler-demo-control-plane-qs74b 1/1 Running 0 10m 192.168.56.145 demo-control-plane-qs74b <none> <none>

kube-system kube-vip-demo-control-plane-qs74b 1/1 Running 0 10m 192.168.56.145 demo-control-plane-qs74b <none> <none>

kube-system vsphere-cloud-controller-manager-92vhd 1/1 Running 0 10m 192.168.56.145 demo-control-plane-qs74b <none> <none>

kube-system vsphere-csi-controller-6664999dc6-rvgj8 5/5 Running 0 10m 100.96.0.3 demo-control-plane-qs74b <none> <none>

kube-system vsphere-csi-node-hw2cw 3/3 Running 0 9m10s 100.96.1.2 demo-md-0-5d8dc5c79-mdbdc <none> <none>

kube-system vsphere-csi-node-wf4qt 3/3 Running 0 10m 100.96.0.4 demo-control-plane-qs74b <none> <none>

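Installing MetalLB

With TKG on vSphere, no LoadBalancer is provided for Workload Clusters. Here, MetalLB is used for verification purposes.

Set METALLB_START_IP and METALLB_END_IP below to a free IP range that is inside the network where TKG is installed but outside the DHCP range. Note that each Workload Cluster needs its own, different range.

Run the following commands to install MetalLB.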

METALLB_START_IP=192.168.56.50

METALLB_END_IP=192.168.56.70

mkdir -p metallb

wget -O metallb/namespace.yaml https://raw.githubusercontent.com/metallb/metallb/v0.9.3/manifests/namespace.yaml

wget -O metallb/metallb.yaml https://raw.githubusercontent.com/metallb/metallb/v0.9.3/manifests/metallb.yaml

kubectl create secret generic -n metallb-system memberlist --from-literal=secretkey="$(openssl rand -base64 128)" --dry-run=client -o yaml > metallb/secret.yaml

cat > metallb/configmap.yaml << EOF
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - ${METALLB_START_IP}-${METALLB_END_IP}
EOF

kubectl apply -f metallb/namespace.yaml -f metallb/metallb.yaml -f metallb/secret.yaml -f metallb/configmap.yaml
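The range given to MetalLB must not contain addresses that are already in use, such as the control plane endpoint IPs (192.168.56.100 and 192.168.56.101 in this post) or the DHCP-assigned node addresses. The following sketch shows one way to check whether an IPv4 address falls inside the chosen range. ip_to_int and ip_in_range are hypothetical helpers, and they assume well-formed dotted-quad input.

```shell
# Convert a dotted-quad IPv4 address to its integer value.
ip_to_int() (
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed when address $1 lies within the inclusive range $2-$3.
ip_in_range() (
  ip=$(ip_to_int "$1")
  start=$(ip_to_int "$2")
  end=$(ip_to_int "$3")
  [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]
)
```

For example, `ip_in_range 192.168.56.100 "$METALLB_START_IP" "$METALLB_END_IP"` fails for the range used above, which means the Management Cluster endpoint does not collide with the MetalLB pool.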

Check the Pods.

$ kubectl get pod -n metallb-system

NAME READY STATUS RESTARTS AGE

controller-57f648cb96-kt6x4 1/1 Running 0 17s

speaker-fkxcl 1/1 Running 0 17s

speaker-svxph 1/1 Running 0 17s

Deploy a sample application to verify that it works.

kubectl create deployment demo --image=making/hello-world --dry-run=client -o=yaml > /tmp/deployment.yaml

echo --- >> /tmp/deployment.yaml

kubectl create service loadbalancer demo --tcp=80:8080 --dry-run=client -o=yaml >> /tmp/deployment.yaml

kubectl apply -f /tmp/deployment.yaml

Check the Pod and the Service.

$ kubectl get pod,svc -l app=demo

NAME READY STATUS RESTARTS AGE

pod/demo-6996c67686-l847v 1/1 Running 0 25s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/demo LoadBalancer 100.70.73.202 192.168.56.50 80:31150/TCP 25s

Access the application.

$ curl http://192.168.56.50

Hello World!

$ curl http://192.168.56.50/actuator/health

{"status":"UP","groups":["liveness","readiness"]}

Delete the deployed resources.

kubectl delete -f /tmp/deployment.yaml

Checking Persistent Volumes

List the StorageClasses.

$ kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

default (default) csi.vsphere.vmware.com Delete Immediate false 21m

To verify that it works, create the following PersistentVolumeClaim object.

cat <<EOF > /tmp/pvc.yml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF

kubectl apply -f /tmp/pvc.yml

Check the PersistentVolumeClaim and the PersistentVolume, and confirm that STATUS is Bound.

$ kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-ce745cef-f0dc-4cd6-b555-24a66a068104 1Gi RWO Delete Bound default/task-pv-claim default 91s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/task-pv-claim Bound pvc-ce745cef-f0dc-4cd6-b555-24a66a068104 1Gi RWO default 92s
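The claim may take a little while to reach Bound, so a script would typically poll rather than check once. The following is a generic sketch. retry_until and pvc_is_bound are hypothetical helpers, and the attempt count and delay are arbitrary.

```shell
# Run a command up to $1 times, sleeping $2 seconds between attempts.
# Succeeds as soon as the command succeeds; fails if every attempt fails.
retry_until() (
  attempts=$1 delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      exit 0
    fi
    i=$((i + 1))
    # Sleep only when another attempt follows.
    [ "$i" -le "$attempts" ] && sleep "$delay"
  done
  exit 1
)

# Succeed only when the named PVC reports phase "Bound".
pvc_is_bound() {
  [ "$(kubectl get pvc "$1" -o jsonpath='{.status.phase}')" = "Bound" ]
}
```

For example, `retry_until 30 2 pvc_is_bound task-pv-claim` waits for up to about a minute.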

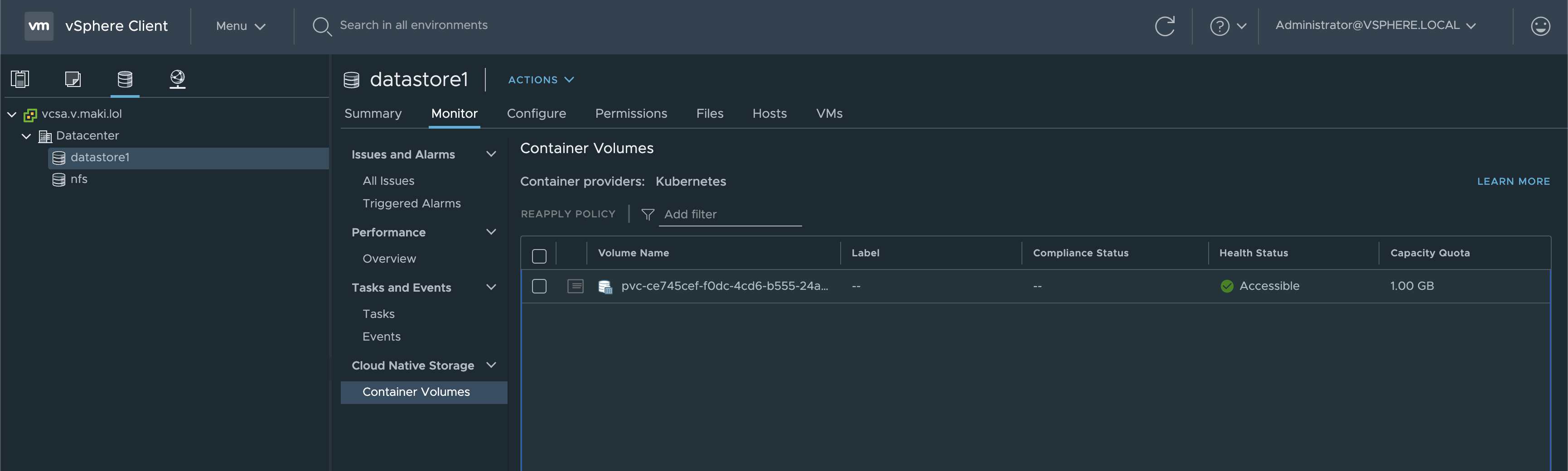

You can see this volume in vCenter.

Delete the deployed resources.

kubectl delete -f /tmp/pvc.yml

Scaling out the Workload Cluster

The tkg scale cluster command scales out a Workload Cluster.

tkg scale cluster demo -w 3

You can confirm that the number of workers has increased to 3.

$ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

demo-control-plane-qs74b Ready master 49m v1.18.8+vmware.1 192.168.56.145 192.168.56.145 VMware Photon OS/Linux 4.19.145-2.ph3 containerd://1.3.4

demo-md-0-5d8dc5c79-6l4x6 Ready <none> 25m v1.18.8+vmware.1 192.168.56.147 192.168.56.147 VMware Photon OS/Linux 4.19.145-2.ph3 containerd://1.3.4

demo-md-0-5d8dc5c79-h6ghd Ready <none> 25m v1.18.8+vmware.1 192.168.56.148 192.168.56.148 VMware Photon OS/Linux 4.19.145-2.ph3 containerd://1.3.4

demo-md-0-5d8dc5c79-mdbdc Ready <none> 48m v1.18.8+vmware.1 192.168.56.146 192.168.56.146 VMware Photon OS/Linux 4.19.145-2.ph3 containerd://1.3.4
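To confirm the result in a script rather than by eye, the node list can be counted. The following sketch assumes that worker nodes show <none> in the ROLES column, as in the output above. count_ready_workers is a hypothetical helper.

```shell
# Read `kubectl get node` output on stdin and print the number of nodes
# whose STATUS is exactly "Ready" and whose ROLES column is "<none>".
count_ready_workers() {
  awk 'NR > 1 && $2 == "Ready" && $3 == "<none>" { n++ } END { print n + 0 }'
}
```

For example, `kubectl get node | count_ready_workers` should print 3 once the scale-out has finished.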

Deleting the Workload Cluster

Delete the demo Workload Cluster.

tkg delete cluster demo -y

Running tkg get cluster shows the STATUS as deleting.

$ tkg get cluster

NAME NAMESPACE STATUS CONTROLPLANE WORKERS KUBERNETES

demo default deleting

After a while, demo disappears from the tkg get cluster output.

$ tkg get cluster

NAME NAMESPACE STATUS CONTROLPLANE WORKERS KUBERNETES
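Since the deletion completes in the background, a script that deletes the Management Cluster next may want to wait until the Workload Cluster is gone from the list. The following sketch assumes the tkg get cluster output format shown above. cluster_listed is a hypothetical helper.

```shell
# Read `tkg get cluster` output on stdin and succeed if a cluster with
# the given name appears in the NAME column (the header line is skipped).
cluster_listed() {
  awk -v name="$1" 'NR > 1 && $1 == name { found = 1 } END { exit !found }'
}
```

A loop such as `while tkg get cluster | cluster_listed demo; do sleep 10; done` would then block until demo no longer appears.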

Deleting the Management Cluster

Delete the Management Cluster with the following commands.

source tkg-env.sh

tkg delete management-cluster tkg-sandbox -y

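A Kind cluster is also used when deleting the Management Cluster.

This post created Kubernetes clusters on vSphere 7 with TKG.

Because the tkg command (plus Cluster API) lets you create and manage clusters in a consistent way on vSphere, AWS, and Azure alike, I think it is especially useful if you manage Kubernetes across multiple clouds.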